"Non-standard" head shapes mean flex circuits are a given.

We've come a long way in the AR/VR space. It seems like we're going to have this stuff whether we want it at the moment or not. It's kind of like the Northwest Passage through the ice cap. It's new. We're not sure what the result looks like, but we're charging ahead with a virtual and/or augmented future.

Set the wayback machine to 1939, when both my father and the View-Master stereoscope entered the room. This wasn't long after Kodachrome was invented, so it was cutting-edge at the time. We put circular cards into the slot and could browse seven different views that somehow tricked the brain into seeing depth by showing each eye one of two slightly different slides (Figure 1).

Back in "real" reality, this technology still has a lot of room to grow. It was about a decade ago when virtual reality started to bubble up into the lexicon at Google. We knew that a new industry was coming into existence and wanted to at least provide a gateway to the content. A group adjacent to the Chrome team developed a product called "Cardboard" that reminded me of the View-Master.

The difference between the View-Master and Cardboard is that each eye gets an altered video instead of a slide show. Add in some audio tailored to each ear and presto, you have virtual reality. Note that companies like HTC were already trailblazing the VR space back then. HTC continues to set the pace with a wide range of products for consumer and enterprise applications.

Figure 1. They still make these View-Masters 85 years after introduction. (Source: Target)

Creating content for VR is pretty fascinating; not just a green screen but a green world for the actors to play in. The rigging, lighting and multiple camera placements capture action in a way that immerses the user in the synthetic worldscape. Wearing the headset for the first time can be a little disorienting.

It's hard to say exactly where all this is leading but immersive video games seem to be the essential starting place. While I was working on Daydream VR, around 2015, I had the idea of remotely touring real estate as a possible application. Spurred by the pandemic, we now have that as an option in some apartment complexes. Still, I see virtual tours and remote learning as potential VR opportunities, though Daydream itself was taken off the market in 2019.

Figure 2. Just insert a smartphone and you're in a form of virtual reality. (Source: RoadToVR.com)

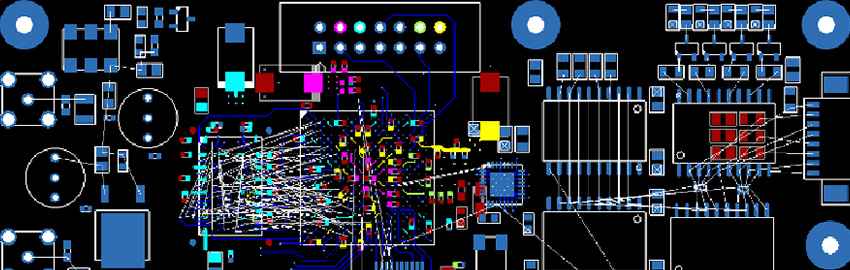

Why AR/VR is different in terms of PCB layout. Wearable technology deals with the human element, and we're not made of rectangles. The printed circuit boards that fill the volume of the headset must adapt to the shape of an average-size person's head while still having the means to accommodate larger and smaller people. Parts of the system must articulate as necessary.

Another aspect is simply the amount of computational work that streaming graphics entails for the system. A device that warms your pocket is one thing. Warming your forehead is quite another. Those little flat fans that inhabit most laptops also find their way into mixed reality headsets. They are about as thick as the printed circuit board and often take up residence in a cut-out that would remind one of a nautilus shell, as those fans use the same golden ratio principles to move air. Passive heat spreaders in addition to the active fans are to be expected.

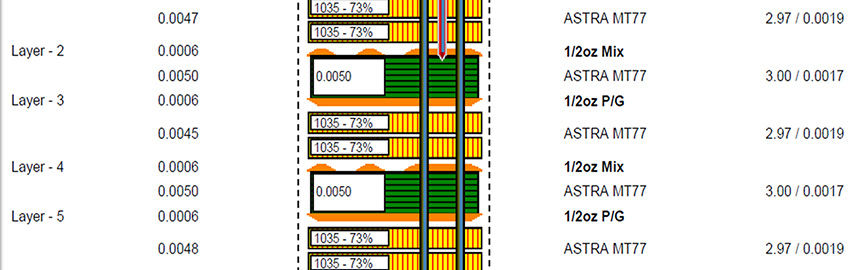

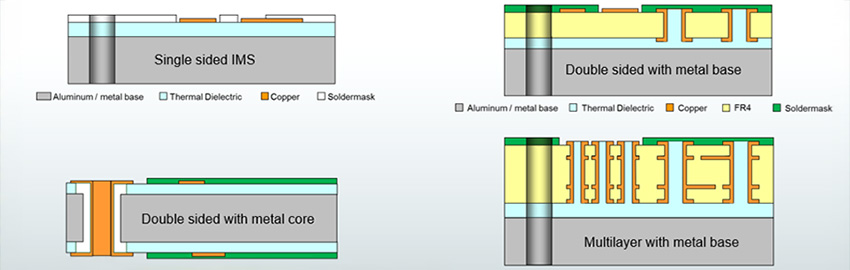

The main logic board of an AR/VR system has everything a smartphone has other than the actual phone call part. The SoC (system-on-a-chip) that went into the Daydream headset was the same SoC used in the Pixel 3 phone. I worked on the phone project just before jumping to virtual reality. Mobile chips are the thing for obvious reasons. The standard stack-up is going to be 12 layers with microvias on every layer.

Flex circuits: Endemic to mixed reality. Flex circuits get a lot of use in consumer electronics, especially with goggles and headsets. The unique anatomy each of us has means that the system must be adjustable in specific ways. Joining the HoloLens team exposed me to the kind of flexes that I had simply sent off to the original design manufacturer (ODM) while at Google. It was an eye-opening lesson that flexes and rigid-flexes are far more than purpose-built flat cables.

Think of the number of different sizes available at the optometrist. The distance between our eyes is a key factor that must be dialed in for the system to work properly. Meanwhile, our eyes are monitored by separate cameras to figure out which way we are looking while wearing the apparatus. The software uses that information to maximize the resolution of the virtual thing that you're actively observing.

The virtual and augmented worlds are all about control. Controllers usually come with the device and work in one of two ways. One uses at least two base stations in the VR space and triangulates on the headset and controller(s) using what amounts to LiDAR in the infrared band. This technology is known as the Lighthouse Tracking System and is found in HTC products. Meta, meanwhile, goes with infrared LEDs and tracks them with cameras rather than photodetectors. Its technology is called Inside Out Tracking.
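To make the base-station idea concrete, here is a rough sketch in Python. It assumes a sync flash followed by a laser line swept at a fixed rotor rate, so the delay before a photodetector sees the laser maps directly to an angle. Two such angles from stations a known distance apart then fix a position. The 60Hz rotor rate, the function names, the sample timings and the flat 2D geometry are all illustrative assumptions, not details of any shipping product.

```python
import math

ROTOR_HZ = 60.0  # assumed sweep rate in revolutions per second


def sweep_angle(t_sync: float, t_hit: float, rotor_hz: float = ROTOR_HZ) -> float:
    """Angle in radians swept between the sync flash and the laser hit."""
    dt = t_hit - t_sync
    if not 0.0 <= dt < 1.0 / rotor_hz:
        raise ValueError("laser hit must fall within one rotor period of the sync flash")
    return 2.0 * math.pi * rotor_hz * dt


def triangulate_2d(angle_a: float, angle_b: float, baseline: float) -> tuple[float, float]:
    """Locate a sensor from two bearings.

    Station A sits at (0, 0) and station B at (baseline, 0). Each bearing is
    measured counterclockwise from the +x axis at its own station.
    """
    tan_a, tan_b = math.tan(angle_a), math.tan(angle_b)
    if math.isclose(tan_a, tan_b):
        raise ValueError("bearings are parallel; no unique intersection")
    x = baseline * tan_b / (tan_b - tan_a)
    return x, x * tan_a


# Hypothetical timings: hits 1/480 s and 1/160 s after each station's sync flash.
angle_a = sweep_angle(0.0, 1.0 / 480.0)  # 45 degrees
angle_b = sweep_angle(0.0, 1.0 / 160.0)  # 135 degrees
x, y = triangulate_2d(angle_a, angle_b, baseline=2.0)
print(f"sensor at ({x:.2f}, {y:.2f}) m")
```

With stations 2m apart, those sample timings place the sensor about 1m along the baseline and 1m out from it. A real system would solve a full 3D pose from many photodetectors at once, so treat this as a toy.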

The new spatial computer from Apple does away with the controllers, preferring to run off hand gestures that you would learn for different ways of manipulating items in mixed reality. I recall gestures being done on early Pixel phones. That wasn't the most popular user experience on the phone back then. Meanwhile, today's content creators have high praise for the hand tracking on the Vision Pro platform.

Credit no fewer than 12(!) cameras plus a LiDAR unit for the overall performance. That's even more than a Tesla. What I know about it is just from Apple's marketing material. Augmented reality is adding content to the real world. Virtual reality is closer to a video game where the world is 100% special effects.

Either way, if you have to have a controller, you want six degrees of freedom: movement up and down, left and right, forward and back, plus rotation about each of those three axes (pitch, yaw and roll). It should be a simple design and robust enough so that you can break the wall-mounted television with it. For some reason, that seems to be the most video-worthy failure mode of virtual reality. Does artificial intelligence want you to punch your TV right in the mouth? The less-amusing failure mode is neck strain.

When it comes to wider adoption, less is more. It's not a huge leap to conclude that the only way the industry is going to really take off is to take considerable mass away from the whole system. The various circuits that make up the entire product must be further integrated into chipsets that combine more features, and that can be hard to do, especially when it comes to the plethora of sensors.

The interoperability of the various sensors depends on board layout to some extent. Every antenna, especially the unplanned ones, creates a new dimension of coexistence issues. Simply put, one more antenna or noise source doesn't just add on; it multiplies the possible emissions problems.
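One rough way to see the multiplying effect is to count aggressor/victim pairs, since every source can potentially disturb every other one. The sketch below does only that counting. The list of sources is hypothetical, and a pair is a potential problem to check, not a guaranteed one.

```python
from itertools import permutations


def aggressor_victim_pairs(sources: list[str]) -> list[tuple[str, str]]:
    """Every ordered (aggressor, victim) pair among the given sources."""
    return list(permutations(sources, 2))


# Hypothetical list of emitters and receivers in a headset.
radios = ["WiFi", "Bluetooth", "tracking camera clock", "eye camera clock"]
before = len(aggressor_victim_pairs(radios))
after = len(aggressor_victim_pairs(radios + ["display link"]))
print(before, after)
```

Going from four sources to five takes the count from 12 pairs to 20, so the fifth source alone brings eight new combinations to check.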

Just combining WiFi and Bluetooth on a single radio chip is a huge challenge. To get the most from the experience, we want the new WiFi 6 alongside a 60GHz link (WiGig, IEEE 802.11ad/ay) that replaces the HDMI cable. Meanwhile, everything from batteries to memory chips must shrink for this to really work for everyone.

In the marketplace, the early adopters are always the ones to pay the price for getting a new product off the launch pad. Count camcorders and DVD players among my early adoptions. Maybe someday, we'll be using a device on our heads to fly through a virtual printed circuit board looking for trouble from the signal's perspective. Our virtual future will arrive in one form or another. It will take time, but not another 85 years.

John Burkhert Jr. is a career PCB designer experienced in military, telecom, consumer hardware and, lately, the automotive industry. Originally, he was an RF specialist but is compelled to flip the bit now and then to fill the need for high-speed digital design. He enjoys playing bass and racing bikes when he's not writing about or performing PCB layout. His column is produced by Cadence Design Systems and runs monthly.
