Without the right context, AI gives answers that sound right but can quietly derail manufacturing decisions.

It’s 9:15 AM on a Tuesday, and Maria – your rising star process engineer – is about to make a $50,000 mistake.

She asked AI a simple question: “What’s the recommended cure temperature for FR-4 laminate?” The answer came back instantly, confidently: “Cure at 180°C for 90 minutes.” She’s two clicks away from updating the work instruction that will go to the production floor for today’s run of 24 panels.

Then something makes her pause. Maybe it’s the fact that 180°C seems high. Maybe it’s muscle memory from her mentor telling her to always verify. She opens the material datasheet.

The actual spec? 170°C ±5°C for 60-75 minutes. AI was close – but in PCB manufacturing, “close” means scrapped boards, delayed shipments and a very uncomfortable conversation with your customer.

Here’s what just happened: AI didn’t lie to her. It didn’t malfunction. It provided her with a statistically plausible answer based on patterns in its training data. But it lacked the specific context of her material, her process, her customer’s requirements. And without that context, even the best AI becomes a very expensive guess.

If you tried AI after reading my first article and got results that felt unreliable, you probably blamed the AI. “It hallucinates.” “It makes things up.” “I can’t trust it.”

What most people miss, however, is that AI doesn’t fail because it’s broken. It fails because we don’t give it the context it needs to succeed.

This article explains one of the most important skills for working with AI: context control. Master this, and AI transforms from an unreliable assistant into a thought partner you can trust. Skip this, and you’ll stay frustrated, or worse, you’ll stop using AI altogether and fall behind competitors who figured this out.

Let’s fix that.

Seeing Things (for the Better)

First, let’s clear up what’s happening when AI gives you wrong answers.

AI doesn’t “know” anything. It’s a pattern-matching engine trained on billions of text examples. When you ask it a question, it predicts the statistically most likely response based on patterns it has seen before. It’s incredibly good at this – but it has no understanding of truth, no access to your specific datasheets and no concept of what “Class 3 registration tolerance” means in your facility.

Think of it this way: AI without context is like a process engineer walking onto your production floor for the first time. They have general knowledge about PCB manufacturing. They’ve read the textbooks. They understand the principles. But they don’t know your equipment quirks, your material suppliers, your customer specifications or the tribal knowledge your team has built over years of production runs.

Would you trust that engineer to update work instructions on day one? Of course not. You’d spend time teaching them the context: “Here’s how we run this line. Here’s why we deviate from the standard process. Here’s what the datasheet doesn’t tell you.”

That’s exactly what you need to do with AI.

The good news? This isn’t an AI limitation – it’s a design feature. AI can work across domains only because it doesn’t come preloaded with assumptions about your specific situation. Your job is to provide the context that makes its pattern-matching abilities useful.

And here’s the critical insight: the engineers who get reliable AI outputs aren’t using better AI – they’re providing better context.

This is a learnable skill. And once you have it, AI stops being unreliable and starts being indispensable.

The Anatomy of a Reliable Prompt: Building on CRIT

In our December 2025 article, I introduced the CRIT framework: Context, Role, Interview, Task. If you tried it, you probably got better results than you were getting before. But you might have also wondered: “How much context is enough? What should I include? What can I skip?”

Let’s refine that framework with specific guidance on what makes context actually work.

Context isn’t just background information – it’s constraint definition. When you provide context, you’re telling AI what boundaries to work within, what assumptions are valid and what success looks like in your environment.

Here’s what effective context includes:

- Specificity over generality.

Bad: “I’m working on a PCB.”

Good: “I’m designing an 8-layer HDI board with 0.4mm pitch BGAs for automotive Class 3 applications.”

Why it matters: AI has patterns for “PCB” that span everything from hobby projects to aerospace. Narrow the domain, get better answers.

- Your constraints, not industry defaults.

Bad: “What’s the recommended drill size?”

Good: “Our shop’s smallest reliable drill is 0.25mm. The customer requires 0.2mm finished holes. What drill size accounts for plating thickness?”

Why it matters: AI knows industry standards. What it doesn’t know is your equipment capabilities and limitations. That’s the context that makes answers actionable.

- The outcome format you actually need.

Bad: “Tell me about impedance control.”

Good: “Give me a 3-step troubleshooting checklist I can hand to my technician when we see impedance drift on 50-ohm traces.”

Why it matters: You’re not writing a research paper. You need outputs you can use Monday morning. Specify the format, and AI delivers it.

- What you’ve already tried (if troubleshooting).

Bad: “Why is my solder mask cracking?”

Good: “I’m seeing solder mask cracking after reflow. Already verified cure temperature (150°C × 45min), checked for contamination, and confirmed material is within shelf life. What else should I investigate?”

Why it matters: AI won’t waste time suggesting what you’ve already eliminated. And if you are unsure of what more to share, use the “Interview” step in CRIT – you’re teaching AI what you know.

Let me show you this in action with a real manufacturing scenario.

Before and after: The same question, radically different results.

Let’s say your drill breakage rate just jumped to 3.8% from 0.5% over the past two weeks. You’re running 10 mil drills through standard 63 mil FR-4, and suddenly you’re breaking 7-8 drills per shift instead of 1-2. That’s costing you money in drill replacement, machine downtime and scrapped panels. You decide to ask AI for help.

Version 1: The vague prompt. “Why are my drills breaking?”

AI’s response:

“Drill breakage can result from multiple factors: improper feed rates, spindle speed issues, dull bits, material hardness variations, inadequate chip evacuation, entry material condition or backup board quality. I’d recommend reviewing your drill parameters and conducting a systematic process audit to identify the root cause.”

Is that helpful? Technically accurate, sure. But you already knew all that. You just got a textbook answer that doesn’t move you forward. You’re back to square one, and drills are still breaking.

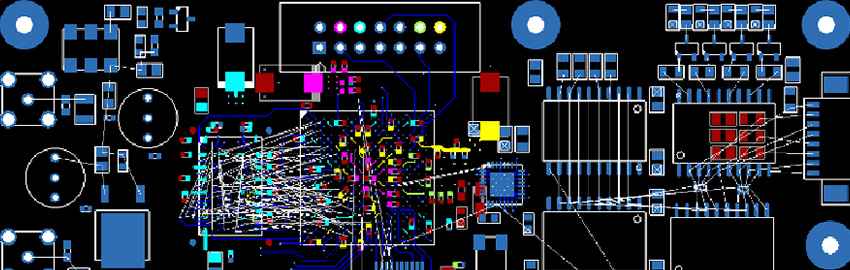

We have a free vector graph simulation of this on our website.

Version 2: The context-rich prompt. Now, let’s apply the four context principles:

CONTEXT: I’m running a Schmoll drill machine on a Class 3 multilayer board. Standard 63 mil FR-4 material, 10 mil drill diameter, 8-layer stack. Breakage rate was stable at 0.5% for six months. Two weeks ago, it jumped to 3.8% and hasn’t recovered. Drills are breaking at depth (approximately 40-47 mils into the stack), not at entry or exit.

ROLE: Act as an expert PCB drill engineer who has prior experience at a laminate supplier and a drill bit distributor.

SPECIFICS: Using carbide micro-grain drills, same supplier/lot as past 3 months. Spindle speed 120k RPM, feed rate 80 ipm (chip load 0.33 mils/flute). Breaking occurs randomly across the panel, not concentrated in any specific zone. Running 18-up panelization.

CONSTRAINTS: I’ve already verified spindle runout (within 0.2 mils), confirmed coolant flow and pressure, checked entry/backup board condition, and validated drill program parameters haven’t changed. Tried fresh drills from new box – same breakage rate. Material is from the same supplier, but could be a different lot.

INTERVIEW: Ask me questions (ONE AT A TIME) to provide you with more context in order to accomplish the task.

TASK: Provide three specific root-cause hypotheses ranked by probability, with a test I can run THIS WEEK to confirm or eliminate each one. I need to get breakage back under 1%.

AI’s response (note: this is the real AI output):

“Given the sudden onset, depth-specific breakage pattern, and the fact that you’ve eliminated tooling and machine variables, here are three hypotheses ranked by likelihood:

- **Chip evacuation degradation**

- Test: Inspect vacuum system integrity. Check if you’ve had recent increase in hole count per panel that’s generating more swarf. Examine broken drill tips under scope for evidence of chip packing.

- Why: Poor chip evacuation at depth causes heat buildup and drill binding. Two-week timeframe could align with a pipe leak or accumulated contamination.

- Action: Inspect vacuum system lines for leaks or blockages. Clear any accumulated swarf from vacuum ports. If breakage improves immediately, implement more frequent vacuum system maintenance checks.

- **Spindle bearing wear causing vibration at depth**

- Test: Run vibration analysis on spindle at operating RPM. Check for frequency spikes. If unavailable, drill test pattern at reduced RPM (100k) and see if breakage rate changes.

- Why: Two weeks suggests gradual bearing degradation. Vibration becomes more pronounced at depth where drill deflection is maximum. Spindle runout might be within spec at rest but develops harmonic vibration under load.

- Action: Check spindle service hours. If approaching PM interval (typically 1500-2000 hours for micro-drilling), schedule bearing replacement.

- **Wrong Material**

- Test: Request Certificate of Conformance for current material lot vs. previous lot. Inspect internal quality records. Compare Tg and resin system specs. Alternatively, run identical drill program on a panel from old material lot if you have archive samples.

- Why: Breakage at 40-47 mil depth suggests the drill is encountering harder material mid-stack where glass fabric density is highest. Higher Tg material would increase cutting forces at that depth.

- Action: As Tg increases, reduce feed rate 15-20% and monitor breakage.

Start with material lot verification, which takes 30 minutes. If C of C shows material change, the feed rate adjustment test can run today.”

See the difference?

Version 2 gave you an action plan you can execute this week. It prioritized hypotheses based on your specific process and equipment. It didn’t waste time on things you’d already eliminated (e.g., drill program, entry material). It recognized the urgency (a five-day deadline to return to <1%) and provided the fastest test first (30 minutes to check material certificate of conformance).

Same AI. Same “question.” Completely different value.

Figure 1. A moment of pause at the work instruction screen shows why AI answers without context can turn “almost right” into an expensive manufacturing mistake.

When to Trust AI (and When to Verify)

Getting reliable outputs doesn’t mean blindly trusting everything AI tells you. It means knowing when AI’s strengths align with your needs, and when you need to verify.

Here’s a practical framework for the commercial AI tools that most small and midsize businesses (SMBs) use.

AI excels at (generally trustworthy):

- Pattern recognition and analysis: “Review this failure data and identify trends.”

- Drafting and formatting: “Convert my meeting notes into a status report.”

- Brainstorming and alternatives: “Give me five ways to approach this design constraint.”

- Explaining concepts: “Explain impedance matching like I’m teaching a new technician.”

- Process documentation: “Create a troubleshooting checklist for this equipment.”

AI struggles with (always verify):

- Math and calculations: AI is pattern-based, not computational. Use it for explanation, not calculation.

- Solution 1: Use coding-capable AI (Claude Code, ChatGPT with Code Interpreter) to write and execute Python scripts for calculations.

- Solution 2: Ask AI to explain the formula, then verify the calculation yourself or use a spreadsheet.

- Solution 3: Have AI generate the calculation logic, but always verify the math with a second method.

- Current facts or data: It doesn’t browse the internet every time.

- Solution 1: Ensure the AI you’re using has web search enabled with source citations for information less than one year old. Add “use internet” to your prompt to enforce this.

- Solution 2: Use AI to draft the query, then manually search databases (e.g., Digi-Key) for pricing.

- Solution 3: Ask AI for the approach/methodology, then plug in current data yourself from verified sources.

- Your specific procedures: It doesn’t know your company’s actual processes unless you teach it.

- Solution 1: Upload procedures into the AI’s context window at the start of each session.

- Solution 2: Create a custom GPT or Claude Project with your company documents preloaded.

- Solution 3: Reference specific procedure documents by name in your prompt and paste relevant sections inline.

- Safety-critical decisions: Never trust AI for decisions that could cause injury or catastrophic failure without expert review.

- Solution 1: Use AI for initial analysis and recommendations, then require signoff from a qualified engineer or safety officer.

- Solution 2: Implement a “two-person rule” where AI output plus a human expert must both agree before action.

- Solution 3: Limit AI to advisory role only – it suggests options, humans make final safety-critical decisions.

- Regulatory compliance: AI can explain regulations but shouldn’t be your sole source for compliance verification.

- Solution 1: Use AI to interpret regulations, then verify against official regulatory documents or legal counsel.

- Solution 2: Have AI draft compliance checklists, then have a human validate each item against current standards (IPC, UL, etc.).

- Solution 3: Employ AI for gap analysis of your processes but confirm findings with a compliance expert or auditor.
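Returning to the math-and-calculations item above, here is a minimal sketch of the kind of short Python script a coding-capable AI might write and run instead of "predicting" a number. It recomputes the chip load from the drill prompt earlier in this article (120k RPM, 80 ipm). The function name is hypothetical, and the two-flute drill geometry is an assumption; the prompt does not state the flute count.

```python
def chip_load_mils_per_flute(feed_ipm: float, spindle_rpm: float, flutes: int) -> float:
    """Chip load = feed per revolution divided by the number of flutes."""
    if spindle_rpm <= 0 or flutes <= 0:
        raise ValueError("spindle_rpm and flutes must be positive")
    inches_per_rev = feed_ipm / spindle_rpm
    return inches_per_rev * 1000.0 / flutes  # 1 inch = 1000 mils


# From the drill prompt: 120k RPM, 80 ipm. Two flutes is an ASSUMPTION.
load = chip_load_mils_per_flute(feed_ipm=80.0, spindle_rpm=120_000, flutes=2)
print(f"Chip load: {load:.2f} mils/flute")

# Second method, in the spirit of Solution 3: invert the formula and
# confirm it recovers the original feed rate.
feed_back = load / 1000.0 * 2 * 120_000
assert abs(feed_back - 80.0) < 1e-9
```

Under the two-flute assumption, the result rounds to the 0.33 mils/flute quoted in the prompt. The point is less the number than the habit: the script's logic can be read, rerun and cross-checked, which a number typed out by a chat model cannot.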

The 30-Second Verification Habit

For anything that matters, run this quick check before acting on AI’s output:

- Sanity test: Does this answer make intuitive sense based on your experience? If something feels off, it probably is.

- Source check: For any specific claim, number or specification, can you verify it against a datasheet, standard, or known reference? If the AI says “cure at 180°C,” check the material specification, and keep checking until the AI has earned your trust for that type of question.

- Risk assessment: What happens if this answer is wrong? If the consequence is high (e.g., production impact, customer relationships, safety), verify it with a second source or a subject-matter expert.
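The source check can also be written down as a small script, which may help when the same parameters are verified repeatedly. The sketch below is illustrative only: the class and function names are hypothetical, and the numbers come from the opening example (the AI said 180°C for 90 minutes; the datasheet said 170°C ±5°C for 60-75 minutes).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SpecRange:
    """Allowed range for one parameter, taken from a datasheet."""
    name: str
    low: float
    high: float
    unit: str

    def contains(self, value: float) -> bool:
        return self.low <= value <= self.high


def source_check(claims: dict[str, float], specs: list[SpecRange]) -> list[str]:
    """Return one human-readable finding per datasheet parameter."""
    findings = []
    for spec in specs:
        if spec.name not in claims:
            findings.append(f"{spec.name}: no AI value to check")
            continue
        value = claims[spec.name]
        verdict = "OK" if spec.contains(value) else "OUT OF SPEC"
        findings.append(
            f"{spec.name}: AI said {value:g} {spec.unit}, "
            f"datasheet allows {spec.low:g}-{spec.high:g} {spec.unit} -> {verdict}"
        )
    return findings


# Datasheet values from the opening example: 170°C ±5°C for 60-75 minutes.
datasheet = [
    SpecRange("cure_temperature", 165.0, 175.0, "°C"),
    SpecRange("cure_time", 60.0, 75.0, "min"),
]
# The AI's answer from the same example.
ai_answer = {"cure_temperature": 180.0, "cure_time": 90.0}

for line in source_check(ai_answer, datasheet):
    print(line)
```

Both of the AI's values fall outside the datasheet ranges here. The datasheet remains the authority; the script only makes the comparison explicit and repeatable.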

This isn’t about distrusting AI; it’s about using it intelligently. You wouldn’t implement a process change based solely on one engineer’s opinion, even an expert’s. You’d verify. Apply the same standard to AI.

The goal is confident use, not blind faith.

What You Do Tomorrow Morning

Here’s your assignment: Pick one task you’re facing this week. It could be a quality issue, a design decision, documentation you need to create or a process you’re trying to optimize.

Don’t just ask AI about it. Use what you learned in this article:

Tomorrow’s prompt template:

CONTEXT: [Your specific situation - be detailed]

- What are you working on?

- What constraints are you operating within?

- What’s the current state vs. desired state?

SPECIFICS: [The exact details that matter]

- Measurements, part numbers, specifications

- Your equipment/material/process specifics

- Timeline or urgency if relevant

ALREADY TRIED: [What hasn’t worked]

- What have you already eliminated?

- What approaches have you tested?

TASK: [The exact output format you need]

- Be specific about format (checklist, analysis, options, etc.)

- Include any deadline or urgency

- Specify level of detail needed

[Your specific question here]

That’s it. Use this template once tomorrow. See what happens.
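If you end up reusing the template often, it can be filled in programmatically. The following is a minimal sketch, not a required tool: the class name, field names and the gap check are all hypothetical, and the example values are taken from the drill-breakage scenario earlier in this article.

```python
from dataclasses import dataclass, field


@dataclass
class ContextPrompt:
    """Holds the template sections; names here are illustrative."""
    context: str
    specifics: list[str] = field(default_factory=list)
    already_tried: list[str] = field(default_factory=list)
    task: str = ""
    question: str = ""

    def missing_sections(self) -> list[str]:
        """Name every section left empty, so gaps are visible before sending."""
        checks = {
            "CONTEXT": self.context.strip(),
            "SPECIFICS": self.specifics,
            "ALREADY TRIED": self.already_tried,
            "TASK": self.task.strip(),
        }
        return [name for name, value in checks.items() if not value]

    def render(self) -> str:
        """Assemble the sections in the template's order."""
        def bullets(items: list[str]) -> str:
            return "\n".join(f"- {item}" for item in items)

        parts = [
            f"CONTEXT: {self.context.strip()}",
            "SPECIFICS:\n" + bullets(self.specifics),
            "ALREADY TRIED:\n" + bullets(self.already_tried),
            f"TASK: {self.task.strip()}",
        ]
        if self.question.strip():
            parts.append(self.question.strip())
        return "\n\n".join(parts)


prompt = ContextPrompt(
    context="Breakage on 10 mil drills in 63 mil FR-4 jumped from 0.5% to 3.8% two weeks ago.",
    specifics=["Spindle speed 120k RPM", "Feed rate 80 ipm", "Breaks at 40-47 mils depth"],
    already_tried=["Verified spindle runout", "Tried fresh drills from a new box"],
    task="Three root-cause hypotheses ranked by probability, each with a test I can run this week.",
)
gaps = prompt.missing_sections()
print(prompt.render() if not gaps else f"Fill in before sending: {gaps}")
```

The gap check is the useful part: an empty ALREADY TRIED section is a prompt that will likely get suggestions you have already ruled out.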

You’ll get better results than you did before you read this article. Not because AI got smarter – because you did.

And here’s what you’ll discover: context control isn’t just about getting better AI outputs. It’s about clarifying your own thinking. The act of defining context forces you to articulate the problem more precisely. Sometimes, just writing the context-rich prompt helps you see the solution before AI even responds.

That’s the real skill you’re building here. It’s not prompting – it’s structured problem-solving.

Start tomorrow. One prompt. Full context. Build the habit.

In the next article in this series, we’ll build on this foundation and show you how to move from chatting with AI to automating workflows – taking the prompts you’ve mastered and making them work for you in the background while you focus on higher-value problems. That’s Pillar 2: AI in Business Workflows.

But first: practice your context control. You can’t automate what you haven’t mastered manually.

About this Series

This is the second article in a series exploring practical AI adoption for PCB design and manufacturing professionals. We’re building on the Three Pillars framework from article 1:

Article 1: Don’t Buy AI, Learn AI (Pillar 1 foundation - why learn vs. buy)

Article 2: Context is King (Pillar 1 core skill - getting reliable outputs) ← You are here

Coming next: Building workflow automations without coding (Pillar 2).

This isn’t about buying AI solutions – it’s about developing your team’s AI capabilities.

Sean Patterson is an accomplished executive with extensive C-suite experience across CRO, COO, and CTO roles who now specializes in humanizing artificial intelligence implementation in business environments, particularly manufacturing.

Patterson’s unique approach to AI implementation stems from his multifaceted leadership experience in the PCB industry, including serving as COO and CTO & head of AI at Summit Interconnect, various senior positions at TTM Technologies, and CRO of Nano Dimension. He built Amazon’s tractor trailer division and healthcare platforms. He currently serves as COO of StartGuides, providing military technology working backwards from the soldier. He is also on several nonprofit AI advisory boards in education.

Patterson brings practical insights into how PCB manufacturers can approach AI adoption strategically. His methodology emphasizes cultural adoption from the top, employee empowerment, and then automation. His approach to AI implementation is captured in his often-quoted principle: “AI adoption is not something a leader can delegate.”

Patterson holds a master’s in nuclear science and engineering from MIT and a bachelor’s in systems engineering with a focus on robotics from the United States Naval Academy. He is keynoting the PCB Management Session: Strategic Leadership in the Age of AI, New Technology Adoption, and Talent Scarcity, at PCB East in April.