An electronics startup is developing AI-driven design software that lessens manual intervention.

The role of artificial intelligence in PCB design is a growing topic of debate throughout the industry, with discussions ranging from the past shortcomings of autorouting to the best methods for training AI and its potential to replace human designers.

We spoke in June with Sergiy Nesterenko, founder and CEO of a new software company called Quilter, whose goal is to accelerate hardware development by fully automating circuit board design. The former SpaceX engineer discussed why he thinks the margins designers build in are excessive and how Quilter’s AI-driven, physics-based platform can resolve and even violate some “human” rules while still generating superior printed circuit boards.

The following has been lightly edited for grammar and clarity.

Quilter sees the hard constraints of reinforcement learning as superior to supervised learning AI training methodologies.

Mike Buetow: As we dive further into the world of artificial intelligence in electronics design, we have to come to grips with the fundamental hang-up many designers have about turning over the routing and parts placement of their boards to the CAD tool. Now, some designers point out that autorouters have been part of their tools for decades, and they are all built on some form of machine learning. But those same autorouters are often ignored in practice because they don’t complete designs to the satisfaction of users. That’s a fact that often gets glossed over or completely ignored by developers of AI-based platforms, yet you have acknowledged it head-on, noting in the past the problems inherent in autorouters. Can you explain your thinking here?

Sergiy Nesterenko, founder and CEO, Quilter.

Sergiy Nesterenko: I think from my experience most people just don’t use autorouters, right? If you go to my previous company, SpaceX, and look at any flight board, it’s a bunch of people who are choosing the stackups, placing components, routing boards and thinking about the physics of everything – and of course that’s time consuming. For a complicated board, that’s easily weeks or maybe even months of work, and that’s a problem. I think the motivation to automate this is basically to go faster and to build better boards. If such a tool existed, the need for it would be clear, so the question becomes: why doesn’t it exist yet? Why hasn’t it been built, and why is everything that has been built not good enough?

Where I think autorouters kind of fall apart today is in maybe three major areas. The first is that they typically don’t tackle the entire job. You are still responsible as the user for configuring the stackup. You’re typically responsible for figuring out which fabricator you’re going to use and entering the manufacturing rules. If you have any special considerations like differential pairs or impedance control, you have to encode those into geometry rules yourself. You typically have to do the placement yourself, and then even when the autorouter presents a layout, you have to make sure it’s actually going to work. That’s a lot of configuration and setup, and a lot of things the autorouter simply doesn’t tackle compared with someone doing the full layout.

The second problem is that with a board of any complexity, autorouters usually just don’t complete the job. It’s very common to click the autoroute button and watch it get to, say, 90% of a design, even if the design is only a couple hundred components. You think, “Oh, I only have 10% of the work left to do.” Then you quickly find that the autorouter didn’t leave you room for the last 10% of the traces, and you have to delete everything and restart.

The third problem I already alluded to, which is that you then have to review the autorouter’s work. Even if it completed everything, you have to look at every single trace and ask yourself, “Is the signal integrity here going to be good? Is the crosstalk here going to be too much? Did it consider my high-current traces? Did it consider this, that and the other?” And that is arguably just as difficult as doing the routing yourself and following the rules that you’ve built up over time.

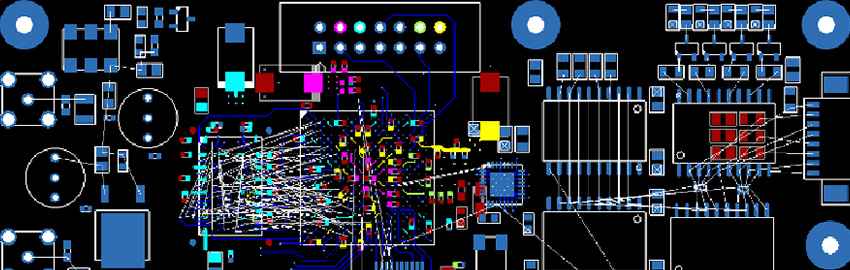

So the place that we’re coming from is that in order to achieve full automation, in order to really solve this problem, we have to solve all of those. We have to tackle the whole problem. Start with the schematic ideally and do the whole thing: identify the manufacturers, respect all of their rules and tolerances, do the placement, do the stackups, and do the full routing with all of the physics included. You have to complete the job, right? A board that’s routed to 99.9% is a broken board. Anything short of 100% is not acceptable. And you have to account for the physics. You have to show the user you’ve considered signal integrity and crosstalk and all of that stuff such that the schematic still fundamentally works.
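To make the “anything short of 100% is broken” criterion concrete, here is a minimal sketch of a completion check. It assumes a hypothetical representation in which each net is a set of pin names and each routed segment (trace or via) joins two pins; a net counts as complete only when all of its pins end up in one connected group. This is a generic union-find technique for illustration, not Quilter’s implementation.

```python
from typing import Dict, Iterable, Set, Tuple


class UnionFind:
    """Disjoint-set structure tracking which pins are electrically joined."""

    def __init__(self) -> None:
        self.parent: Dict[str, str] = {}

    def find(self, x: str) -> str:
        self.parent.setdefault(x, x)
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a: str, b: str) -> None:
        self.parent[self.find(a)] = self.find(b)


def completion_ratio(nets: Dict[str, Set[str]],
                     segments: Iterable[Tuple[str, str]]) -> float:
    """Fraction of nets whose pins are all joined by routed segments."""
    uf = UnionFind()
    for a, b in segments:
        uf.union(a, b)
    done = sum(1 for pins in nets.values()
               if len({uf.find(p) for p in pins}) == 1)
    return done / len(nets)


def is_complete(nets: Dict[str, Set[str]],
                segments: Iterable[Tuple[str, str]]) -> bool:
    # Anything short of every net fully connected counts as a failed layout.
    return completion_ratio(nets, segments) == 1.0
```

With `nets = {"VCC": {"U1.1", "C1.1", "R1.1"}, "GND": {"U1.2", "C1.2"}}` and only the two VCC segments routed, `completion_ratio` returns 0.5 and `is_complete` returns False; routing the single GND segment brings the board to 1.0.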

MB: What does that schematic to layout process look like? Is there any human interaction needed here under the Quilter platform?

SN: One of the positions we take is that we shouldn’t try to pull users away from the tools they already know and love. For example, if you’re used to Altium, we are not trying to come in and say, “Hey, stop using Altium and learn this new platform.” That would be a big lift for many organizations. Instead, we ask you to take your Altium files as input and drag and drop them into Quilter; we do our work and return the Altium file to you so you can continue with reviews and generate manufacturing files the same way you would have. From the user’s perspective, what we really expect is that you’ve finished your schematic completely. At this point, we still ask the user to define the footprints, because a lot of companies have their own footprint libraries that are not externally shared, and they want to keep control over those. At that point you have a board file where the netlist and the footprints have been loaded, and perhaps you’ve defined the board outline and pre-placed some connectors that you know have to go in a specific spot. Everything else stays off the board, and that’s what you input into Quilter. A good model for this is hiring an external layout resource, like a contractor, to do your layout. Think about what you would email them; instead of emailing that person, you’re dragging and dropping it into Quilter’s website.

MB: That’s one of the more novel aspects of this, from what I understand. The user is going to pull the files from the native environment into Quilter. Will it still look like, for example, the Altium environment inside of Quilter? Does Quilter have its own user interface?

SN: Quilter has its own user interface, and it is not meant to be complicated. The main thing that Quilter has to accomplish in the conversation with the user is to understand all of the schematic, and in particular, all of the signal types that are happening throughout the schematic. One of our areas of growth is to understand where you have high currents. Where do you have high-speed? Where do you have differential pairs? Where do you have impedance control? Because that information isn’t necessarily encoded in the schematic. But after that point, Quilter’s aim is to do the layout for you. The inspiration we use internally is that doing the layout for your circuit board should feel like compiling your code. If you think about it, a schematic is a little bit like a programming language, and then a circuit board is trying to make that schematic happen in real life without screwing it up. To us, that should feel more like compiling your schematic than actually doing the layout yourself, and so the interface for Quilter is simple. For that reason, it doesn’t necessarily look like Altium or KiCad or any of the other tools.

MB: You mentioned KiCad there as another one of the CAD formats that you work with. Are there any others of note?

SN: Altium and KiCad are the main ones we support today. We actually had initially built out support for Eagle, but that seems to be shutting down for the most part, so we’re not supporting that anymore. Cadence Allegro is most likely to be the next one we add.

MB: Do you have to develop VAR agreements or any type of technology transfer or shared agreements with any of the CAD vendors in order to develop this?

SN: Thankfully, legally speaking, reading and writing common file formats is not something we have to get agreements for. But we like to work with the companies because it’s easier for us, and in general they’ve been supportive. Altium has been very supportive of us doing this so far and has extended some support from its engineers. We haven’t worked with anyone at Cadence yet, but if somebody’s willing to, we’d be happy to.

MB: Let’s talk about the types of boards you’ve used as proofs of concept and that you’ve successfully routed to date. Let’s start with the hardest part. What’s the most complex board you know of that’s been designed using Quilter? Meaning how many layers, how many pins, DDR memory, or anything like that?

SN: I think of complexity roughly in two dimensions. One is just the raw combinatorics of the geometry: roughly how many pins, how dense the board is, and so on. The other is physics: what are the most interesting signal types you’ve captured?

Starting on the physics side, the most interesting board we’ve done to date, or at least the most interesting one we’ve brought up ourselves within Quilter, is one of the open-source projects from OpenMV. They have a really cool set of computer vision boards with an onboard camera (I think the one we did was an STM32), an SD card, USB and things of that nature, running live computer vision on board for basic face detection, a basic neural network or whatever else. That’s an example of something we have done on the slightly faster side; I think that was a 500MHz processor. We’re pushing in probably the next six to eight weeks to release support for differential pairs and impedance control, which will allow much faster signals. That’ll get us into multi-gigahertz territory for signal integrity, which will be really fun. I’m really excited for that.

On the combinatorics side, the biggest boards we’ve done are unfortunately not public. They’ve been for companies, and I can’t reveal them, but you can imagine big test boards: maybe 15"x30" or something of that nature, with maybe 400 or 500 components, close to 2,000 pins and nothing super high-speed. Nothing more than a couple of amps, but still just a lot of parts and a lot of things to connect. I believe both of these were four-layer boards. I don’t think we’ve gone above six layers so far.

MB: How long did it take for the tool to route that board?

SN: We’ve obviously improved drastically since we’ve done both of those, but both of those should be running in about two to three hours by now.

MB: Start to finish, two to three hours? In your previous life, just setting up the constraints probably took two to three days, right?

SN: Yeah, definitely. At the company we did the big board for, the engineer we worked with estimated that the whole board would have taken them about a week to do, and that’s very much our focus. The major pain I felt at SpaceX designing these boards is that layout is a bottleneck. You can’t put five people on it and get it done in a day, so you’re waiting five or 10 days for your first prototype, and you’re going to have to do several of those iterations before you actually go into production. Since layout comes at the end, it becomes the critical path, and so the ability to shorten it is very valuable for a fast-moving company.

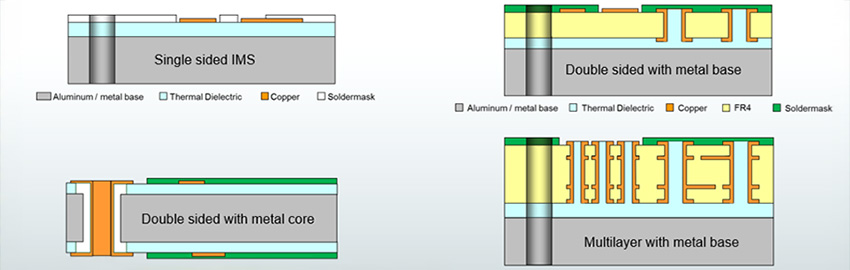

The other interesting thing about Quilter is that, because it’s done in software, we don’t just lay out one board; we lay out hundreds. We can have two-layer, four-layer and six-layer variants across multiple manufacturers, multiple stackups, multiple design rules and multiple tradeoffs, all within that two-to-three-hour window. I think that is what empowers engineers to prototype much more quickly using Quilter.
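Because each layout is a software job, a variant sweep like this amounts to enumerating option combinations and evaluating them independently, in parallel. The sketch below assumes hypothetical option lists and a placeholder `score` function standing in for “lay out the board and grade it”; none of these names or values come from Quilter.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from itertools import product
from typing import List


@dataclass(frozen=True)
class Variant:
    layers: int
    trace_mil: float
    space_mil: float
    fab: str


# Hypothetical option sets; real ones would come from fabricator data.
LAYER_COUNTS = [2, 4, 6]
RULE_SETS = [(10.0, 10.0), (6.0, 6.0), (3.0, 3.0)]  # (trace, space) in mils
FABS = ["fab_a", "fab_b"]


def enumerate_variants() -> List[Variant]:
    """Every combination of layer count, rule set and fabricator."""
    return [Variant(n, t, s, f)
            for n, (t, s), f in product(LAYER_COUNTS, RULE_SETS, FABS)]


def score(v: Variant) -> float:
    """Placeholder for a real layout-and-evaluate run (hypothetical).

    Here fewer layers and coarser (cheaper) rules simply score higher.
    """
    return -float(v.layers) - 10.0 / v.trace_mil


def rank_variants() -> List[Variant]:
    variants = enumerate_variants()
    with ThreadPoolExecutor() as pool:  # variants are independent jobs
        scores = list(pool.map(score, variants))
    order = sorted(range(len(variants)), key=lambda i: scores[i], reverse=True)
    return [variants[i] for i in order]
```

In a real sweep the score would come from physics and manufacturability checks, and a variant that fails them would be discarded rather than ranked.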

Quilter wants to make the process of going from schematic capture to a manufacturable board as fast and automated as possible.

MB: Let’s talk a bit about the intelligence part of the AI. How does Quilter learn?

SN: The way that we approach this problem is by using a small subfield of AI called reinforcement learning. Most people will have heard of this technique from learning about how DeepMind defeated the world champion at Go about five years ago now, and the idea there was that they created an implementation of the game Go within a computer and then they taught the AI to sit on both sides of the board and basically play against itself. Then whenever the neural network won a game, it would be changed in such a way as to make those moves more probable, and whenever it lost a game, it would be changed in such a way as to make those moves less probable. After hundreds of millions of games, the neural networks found strategies for playing Go much better than humans, and famously, of course, defeated the world champion.

That’s very much how we approach this problem. A lot of people think that we would have taken a whole bunch of human-designed boards and learned from them or something like that. I think that’s a mistake for a number of reasons, even if you were able to get that to work well. The way we think of it is that the neural network and other algorithms are playing this game of layout. Where do I put the components? What stackup do I choose? How do I connect everything? Then, once we have a candidate locked in, we can run a bunch of physics and heuristic evaluations to figure out, basically, whether this board is going to work, yes or no. If it’s going to work, the agent becomes more likely to make a design like that, and if it doesn’t, it becomes less likely. So it’s really playing the game of layout, graded by physics.
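The “more probable if it works, less probable if it doesn’t” loop is the core of policy-gradient reinforcement learning. Below is a minimal REINFORCE sketch on a deliberately tiny, one-decision “layout game”: the agent picks a layer count, and a hypothetical `grade` function stands in for the physics evaluation. The action space, reward values and hyperparameters are all assumptions for illustration; the interview does not describe Quilter’s actual agent, actions or evaluators.

```python
import math
import random
from typing import List

# Hypothetical action space: the layer count chosen for the stackup.
LAYER_OPTIONS = [2, 4, 6]


def grade(layers: int) -> float:
    """Stand-in for physics/heuristic evaluation (hypothetical).

    Assumes this toy board only works with 4+ layers and that each extra
    layer costs a little, so 4 layers is the best passing design.
    """
    if layers < 4:
        return 0.0                  # fails: the board would not work
    return 1.0 - 0.05 * layers      # passes, minus a per-layer cost


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train(steps: int = 4000, lr: float = 0.2, seed: int = 0) -> List[float]:
    """REINFORCE with a running-average baseline; returns final probabilities."""
    rng = random.Random(seed)
    logits = [0.0] * len(LAYER_OPTIONS)
    baseline = 0.0
    for _ in range(steps):
        probs = softmax(logits)
        a = rng.choices(range(len(LAYER_OPTIONS)), weights=probs)[0]
        reward = grade(LAYER_OPTIONS[a])
        advantage = reward - baseline
        # d log pi(a) / d logit_k = 1[k == a] - pi_k: above-baseline moves
        # become more probable, below-baseline moves less probable.
        for k in range(len(logits)):
            indicator = 1.0 if k == a else 0.0
            logits[k] += lr * advantage * (indicator - probs[k])
        baseline += 0.05 * (reward - baseline)
    return softmax(logits)
```

Running `train()` should concentrate nearly all probability on four layers: two layers fail the grade outright, and six layers pass but score slightly lower.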

MB: I want to yank on that thread where you noted that you think it’s a mistake to try to take the human decision-making process and apply it. What is it about that that you feel is misguided?

SN: There are two main problems with it. That’s not to say that humans haven’t developed great strategies; they surely have, and many of those are useful as shortcuts in this process. Many things we do will look kind of human because we take inspiration from them. But from a first-principles perspective, the biggest reason I think it’s not a good idea to build a company on learning from existing board designs is that humans aren’t necessarily the best at designing circuit boards. Many boards fail to function at their first bring-up, and many boards fail EMI on their first test, so you already have the issue that you’re learning from a corpus of data where mistakes are likely. The second issue is that humans have to reserve a lot of margin. If you’re looking at a board that’s going to take you a month to lay out, your worst-case scenario is coming up against that deadline and finding out that you couldn’t quite fit everything: the components don’t fit, or the routes don’t fit, or you have to make the board bigger or change the stackup, and all of a sudden you have to undo work and add two or three more weeks. To avoid that, humans start with a board that’s a little bigger than strictly necessary, with a few more layers than strictly necessary and more margin on clearances, trace widths and separations than strictly necessary, just to make sure it works and is on time the first time around.

If you learn from that dataset, that’s the best you’ll ever do, whereas if you learn from first-principles physics, you can eventually make boards that are better than what humans can make. You can eventually eliminate layers, you can make boards smaller, you can make them higher-performing. You can violate some of the rules of thumb humans apply off the cuff when the physics says the design is actually going to work and pass. The potential to make boards that are better than what humans can do is the main reason for me.

The second reason is simply that there aren’t a lot of good open-source designs. The best boards are under lock and key at Apple or SpaceX or wherever, and those companies won’t want anybody training on them. Even if you could collect them all, there are maybe thousands or tens of thousands of them, which is typically not enough to train machine learning systems. Those are the two main reasons we go after reinforcement learning rather than supervised learning.

MB: One of the things that really seems to differentiate you from many of the AI-based platforms that use actual designs as teaching tools is this: you’re saying it’s not worth the effort to try to extract the designer’s intent from the design, because even if you could, that intent might be fundamentally flawed.

SN: I think it’s a matter of what level of intent you’re talking about. It’s critical for us, and for anybody else, to understand that the designer expects a 4A current on a certain net, or a 2GHz impedance-controlled signal, or whatever else. That’s really important. You have to respect that requirement, and you have to verify with physics that you did. Otherwise, the board simply won’t work. But I think the mistake most other AI approaches make is that they look at the fact that the designer followed, say, the “three widths” (3W) rule for limiting crosstalk, and they optimize to replicate that. You’re effectively replicating a heuristic that humans found, rather than solving the real problem, which is “I want to make sure there’s good enough crosstalk isolation between these two pairs.”
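The difference between a heuristic and the requirement behind it can be made concrete with crosstalk. The 3W rule is usually stated as a center-to-center spacing of at least three trace widths. A first-order approximation often quoted from Johnson and Graham’s *High-Speed Digital Design* estimates coupling between traces over a solid plane as K / (1 + (D/H)²), where D is the center-to-center spacing and H the height above the plane. The sketch below is illustrative only: K depends on rise time and coupled length and is set to 1 here as an assumption, and a real tool would use field-solver or simulation results instead.

```python
def meets_3w(width_mil: float, center_spacing_mil: float) -> bool:
    """3W rule of thumb: center-to-center spacing >= 3x the trace width."""
    return center_spacing_mil >= 3.0 * width_mil


def coupling_estimate(center_spacing_mil: float, height_mil: float,
                      k: float = 1.0) -> float:
    """First-order crosstalk estimate K / (1 + (D/H)^2) over a ground plane.

    D is center-to-center spacing, H is height above the reference plane.
    k = 1.0 is an assumed placeholder for the rise-time/length factor.
    """
    return k / (1.0 + (center_spacing_mil / height_mil) ** 2)
```

With 5-mil traces at 15-mil centers the 3W check passes regardless of stackup, yet the estimate is about 0.07 with H = 4 mil and about 0.31 with H = 10 mil, so the same “rule-compliant” spacing can correspond to very different coupling.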

MB: Obviously, it’s one thing to say that a net is functional. But it’s another thing to say that this is actually a buildable design. Because when we talk about whether something can work, as you noted earlier, it all comes down to the physics of the manufacturing plant – their ability to actually make it in a way that it’s functional. So how do you determine if the board itself is manufacturable? Do you try to train on the constraints of the fabricator, as well?

SN: One of the convenient things in reinforcement learning is that you can explicitly force constraints onto your agent that cannot be violated. I’ll give a really simple example. Very obviously, you can’t have two components colliding on a board because then you couldn’t solder them and they couldn’t work. Instead of allowing the neural network to place components in such a way that they’re colliding and letting it learn that they shouldn’t collide, in reinforcement learning you can simply make it impossible for them to collide. And that’s convenient because then you don’t have to spend all that time for the network to learn that very, very basic fact. The same thing is possible with the manufacturing tolerances.
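The collision example corresponds to what is often called action masking: rather than penalizing illegal moves and waiting for the agent to learn to avoid them, the agent is only ever allowed to choose among legal ones. Here is a minimal sketch on a hypothetical integer grid with rectangular footprints, where uniform random choice stands in for a trained policy; it is not Quilter’s placement engine.

```python
import random
from typing import List, Optional, Set, Tuple

Cell = Tuple[int, int]


def footprint_cells(x: int, y: int, w: int, h: int) -> Set[Cell]:
    """Grid cells covered by a w-by-h part whose corner is at (x, y)."""
    return {(x + i, y + j) for i in range(w) for j in range(h)}


def legal_positions(grid_w: int, grid_h: int, w: int, h: int,
                    occupied: Set[Cell]) -> List[Cell]:
    """The action mask: corners where the part is on-board and overlaps nothing."""
    return [(x, y)
            for x in range(grid_w - w + 1)
            for y in range(grid_h - h + 1)
            if not (footprint_cells(x, y, w, h) & occupied)]


def place_all(parts: List[Tuple[int, int]], grid_w: int, grid_h: int,
              seed: int = 0) -> Optional[List[Cell]]:
    """Place parts in order, choosing only among legal (unmasked) positions.

    Uniform random choice stands in for a trained policy restricted to the mask.
    """
    rng = random.Random(seed)
    occupied: Set[Cell] = set()
    placements: List[Cell] = []
    for w, h in parts:
        options = legal_positions(grid_w, grid_h, w, h, occupied)
        if not options:
            return None  # dead end: no legal move, but never an overlap
        x, y = rng.choice(options)
        occupied |= footprint_cells(x, y, w, h)
        placements.append((x, y))
    return placements
```

Because overlapping positions are never offered, no training signal is spent on the “don’t collide” lesson; the agent can still hit a dead end, which a real system would treat as a failed episode.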

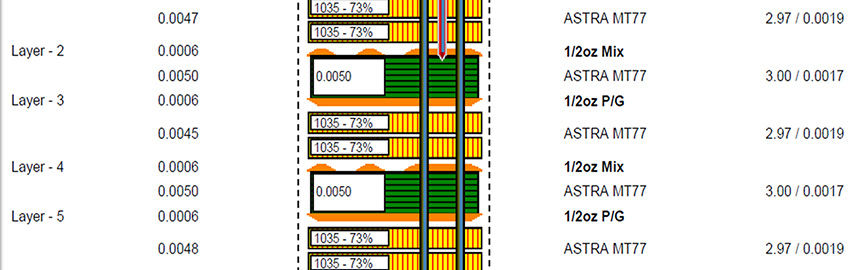

The way we approach this within Quilter is that there are currently about 18 or 20 different combinations of stackups and design rules sourced from manufacturers, and different manufacturers of course have different tolerances. Those constraints are hard constraints that the agent must respect. Quilter effectively starts by trying a generic stackup, something like four layers with 10-mil traces and 10-mil spacing, which basically any manufacturer can do. If that works, fantastic. But if your board has very small components and very small pins, that’s not going to work, so Quilter tries everything down to about 3-mil traces with 3-mil clearance, which only some manufacturers are confident doing, and then identifies that for this board you’re going to need a manufacturer that can handle that kind of tolerance. Instead of training on those constraints, we just treat them as actual constraints.
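The coarse-to-fine search over manufacturer rule sets can be sketched as an ordered list tried from most generic to most aggressive, stopping at the first one that works. The rule table, fabricator names and the one-line `fits` check are hypothetical; in practice “does it fit” would be a full constrained layout attempt, not a single inequality.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class RuleSet:
    trace_mil: float
    space_mil: float
    fabs: Tuple[str, ...]  # fabricators assumed able to hold these rules


# Hypothetical table, ordered from generic (many fabs) to aggressive (few).
RULE_SETS: List[RuleSet] = [
    RuleSet(10.0, 10.0, ("fab_a", "fab_b", "fab_c")),
    RuleSet(6.0, 6.0, ("fab_a", "fab_b")),
    RuleSet(4.0, 4.0, ("fab_b",)),
    RuleSet(3.0, 3.0, ("fab_c",)),
]


def fits(rules: RuleSet, narrowest_gap_mil: float) -> bool:
    """Toy check: one trace plus clearance on both sides must fit between
    the closest pads. A real check would be a full layout attempt."""
    return rules.trace_mil + 2 * rules.space_mil <= narrowest_gap_mil


def pick_rules(narrowest_gap_mil: float) -> Optional[RuleSet]:
    """Try the most generic rules first, tightening only when needed."""
    for rules in RULE_SETS:
        if fits(rules, narrowest_gap_mil):
            return rules
    return None  # nothing in the table can build this board
```

For a board whose tightest pad gap is 15 mil, the 10-mil and 6-mil rules fail (they need 30 and 18 mil), so `pick_rules(15.0)` returns the 4-mil entry and points to `fab_b`.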

MB: So then if somebody wanted to do package-on-package?

SN: That’s not something that we’ve considered to this point, to be honest.

MB: I’m just thinking in terms of the example of two components colliding, but your point is well taken. I think that the bigger question that I have is simply that as the manufacturing capabilities change, how do you ensure that the platform itself stays up to date?

SN: For us, that literally looks like a laundry list of all the manufacturers we’re aware of and their tolerances, and frankly, as manufacturers improve their tolerances and can support smaller features with lower clearances, that’s music to my ears. That makes the combinatorial problem much easier, and then we succeed on more boards. For us, keeping up with manufacturers is just a matter of adding another entry to a database that says this new manufacturer exists, or this new capability exists with this new kind of stackup and these new tolerances.
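Treating fabricator capabilities as data means keeping up with the industry is an insert, not a retraining run. A minimal sketch using Python’s built-in `sqlite3` module follows; the schema, column names and fabricator entries are hypothetical, and matching on exact layer count is a simplification.

```python
import sqlite3
from typing import List


def open_db() -> sqlite3.Connection:
    """In-memory capability table (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE capability ("
        " fab TEXT NOT NULL,"
        " layers INTEGER NOT NULL,"
        " min_trace_mil REAL NOT NULL,"
        " min_space_mil REAL NOT NULL)"
    )
    return conn


def add_capability(conn: sqlite3.Connection, fab: str, layers: int,
                   min_trace_mil: float, min_space_mil: float) -> None:
    """A new fabricator or a new process capability is one more row."""
    conn.execute("INSERT INTO capability VALUES (?, ?, ?, ?)",
                 (fab, layers, min_trace_mil, min_space_mil))


def fabs_for(conn: sqlite3.Connection, layers: int,
             trace_mil: float, space_mil: float) -> List[str]:
    """Fabricators whose minimums are at or below the requested rules."""
    rows = conn.execute(
        "SELECT DISTINCT fab FROM capability"
        " WHERE layers = ? AND min_trace_mil <= ? AND min_space_mil <= ?"
        " ORDER BY fab",
        (layers, trace_mil, space_mil),
    )
    return [row[0] for row in rows]
```

After adding a hypothetical `fab_c` row for 3-mil rules, `fabs_for(conn, 4, 3.0, 3.0)` returns `["fab_c"]`, and a generic 6/6-mil request on four layers matches every fabricator in the table.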

MB: I really do see a difference in terms of the way that you’re fundamentally approaching this versus some of the other AI-based tools out there, either by startups or even by some of the very large ECAD companies. Let’s talk about the company itself for a moment. How many staff do you have and what are their roles?

SN: As you mentioned, we’ve been doing this for a few years now, and until now we’ve been mostly in R&D mode. I think most people don’t appreciate that this is really an ancient problem. The earliest papers I’ve seen on doing placement and routing together algorithmically date back to the early ’60s, so people have been researching this for six decades without a really practical solution reaching the market. So just to acknowledge, this is very, very difficult; we fully expect that, and we’ve built the company accordingly. Between full-time and part-time employees, contractors and everybody involved, I think we’re about 18 or so right now. The vast majority of that is engineering. We’ve got a lot of folks on what we think of as the compiler: all the algorithms and modeling required to represent boards, manipulate them, import them from Altium and export them to Altium, even classic algorithms that do some amount of exploration of the design space, and of course reinforcement learning folks who train models where that’s appropriate. We have a team focused on the application itself: the website, the servers, ingesting files, talking with the user, validating the input files, things of that nature. And we’re starting to build up a product and design function as we move from pure R&D into serving users.

MB: In your past role at SpaceX, you were an electronics engineer. Were you responsible for board design in any way?

SN: Not for flight boards, but for test boards. In particular, most of my time there was spent on radiation effects, basically ionizing radiation, “please don’t kill the rocket.” And most of that work is around good parts choices, schematic choices, subschematic, subsystems, redundancy, things of that nature, and I built a fair amount of boards myself to support that kind of testing and had input on the design of the actual flight boards to make sure their reliability was sufficient to survive flight.

MB: Was there a Eureka moment when you said, “Hey, look I want to go off on my own and create this product because it’s a problem that I see us running into here and I hear from other companies about the same?”

SN: Ironically, the Eureka moment came five years before I left. I sat down to do my first board and was learning Altium for the first time, and I asked myself, “Why am I doing this? A computer should do this.” I tried an autorouter, the autorouter failed, and I said, “OK, I guess I have to do this.” I built the board, got it reviewed by a few other senior engineers to make sure it was all okay, got it brought up, and it caught on fire in my hands.

I then just took it as fact that this is how PCB design goes and I better get better at it, and it wasn’t until four or five years later that I saw that it’s possible for me to start a company and raise venture capital and all these things, and I married that with, what did I really suffer from in my job? What is the big bottleneck that I saw within our departments? And those two came together in the “Alright, let’s start this” moment.

MB: How do you go from that to neural networks and machine learning? Did you take classes in coding?

SN: My undergrad was all in the sciences. I triple majored in math, physics and chemistry just to get a really good foundation. At SpaceX, a lot of my job was programming because I had to do a lot of statistics. It wasn’t enough to just screen parts and see how they do in radiation environments; I ultimately had to be accountable to the customer, in particular the biggest ones at the time, the US Air Force and NASA, that their payloads were safe with us. I’d have to figure out, from all the different inputs of the entire environment and the entire rocket, the final probability of success we were accountable for. That was a lot of statistics and simulations. Around that time, 2015 or 2016, early neural network applications were starting to come up, and it became self-evident to me that they were going to be important. I took my own time on the side to learn all the math behind neural networks and backpropagation and write it out from scratch, and I kept playing with them for the next six or seven years before getting to Quilter. So partially self-taught, partially school, partially experience on the job.

MB: A triple major? You kept your advisor pretty busy, I guess.

SN: Yeah, I had to go to three different advisors to make sure that I could complete all three majors. That was fun.

MB: You announced a $10 million funding round earlier this year. Can you share details about the company’s valuation?

SN: We haven’t made the valuation public yet.

MB: Do you feel pressure to succeed given that kind of funding?

SN: Honestly, I personally feel pressure from all directions. I started the company because I wanted to see this work, so I feel accountable to myself to make it happen. Of course, there are the investors who were willing and brave enough to bet on us, and there are many beyond the Series A round. We did a few rounds before that, and I want to do right by them. Most importantly, probably, are my family and my employees. We’ve got dozens of people here who are supporting us, and it’s not just the people working at the company who have to carry the burden and take a risk. It’s all our families as well who have to support us, and that’s very, very meaningful. At the end of the day, working at a startup is much harder in a lot of ways: it takes a lot more hours, a lot more time, and typically pays less, unless it actually works out. So I really appreciate the risk that everybody’s taking with us, and that’s what gets me up every morning bright and early to make it happen.

MB: There’s a lot riding on all of this, and I keep going back to the fact that the surveys we’ve done suggest only between 5% and 20% of boards are autorouted at all. Most are hand-routed, and hundreds of thousands of hours each year are spent doing everything manually. Congratulations on convincing others of your vision and getting this far.

Let’s talk a bit about your roadmap. If we talk two years down the road from now, where will you be at that point?

SN: I roughly view our core technical compiler roadmap on the same two axes of complexity I identified earlier. One is combinatorics, solving bigger boards, and the second is physics. As we improve the geometry engine and the exploration of different board geometries, that’s what eventually allows us to go from 300, 400, 500 components and 1,000-2,000 pins to tens of thousands of pins, and eventually, hopefully, the big monster boards for networking applications that have hundreds of thousands of pins. That’s one thing that I will obviously be pushing.

Simultaneously, it’s also very critical for us to push on the physics side. I’ll give an example. Today, if you log into Quilter, you’ll only see support for very basic thermal considerations of currents. Within the next six to eight weeks, we’re hoping to push support for impedance control and differential pairs, which gives us the ability to do more high-speed work; high-speed digital specifically is what we’re thinking about the most right now. Of course, over time we have to add support for things like switching converters and power supplies, antennas, analog circuits, ESD and so on. I hope that within two years we’re talking about some very serious boards: FPGAs or CPUs with DDR4 that would have taken a human a month or a month and a half to do, which we’re able to do fully autonomously, with the physics guarantees the user would need to trust that the layouts will work on the first try. Maybe we’ll have a demonstration of that sooner, and that’s certainly my hope, but we’ll see.
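For reference, a widely used starting point for the basic thermal treatment of current is the IPC-2221 curve fit I = k·ΔT^0.44·A^0.725, with A the copper cross-section in square mils, ΔT the allowed temperature rise in °C, and k = 0.048 for external or 0.024 for internal layers (IPC-2152 has since superseded it with more detailed data). The sketch below inverts that fit to get a minimum width; it illustrates the kind of rule involved and is not a statement of how Quilter models current.

```python
def ipc2221_width_mil(current_a: float, temp_rise_c: float,
                      copper_oz: float = 1.0, external: bool = True) -> float:
    """Minimum trace width (mil) from the IPC-2221 current-capacity fit.

    Solves I = k * dT**0.44 * A**0.725 for the cross-section A (sq. mil),
    then divides by copper thickness.
    """
    k = 0.048 if external else 0.024
    area_sq_mil = (current_a / (k * temp_rise_c ** 0.44)) ** (1 / 0.725)
    thickness_mil = 1.378 * copper_oz  # 1 oz/ft^2 copper is about 1.378 mil
    return area_sq_mil / thickness_mil
```

For 1 A with a 10 °C rise on 1 oz external copper this gives roughly 12 mil; the same current on an internal layer needs a noticeably wider trace because of the smaller k.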

MB: What does the typical user look like today and are you able to aggregate any of the data based on how they’re using the platform?

SN: To be very clear, we don’t train on human boards. Boards that people submit to us are not an input we train on, because people are obviously very concerned about the privacy of their boards and about their boards not being reused for anyone else. So that’s very important to state.

As for the kind of users we see right now: we’re in an open beta for a reason, and we welcome everyone to come try it out. It’s not going to be a great application for everyone yet, just because we can’t do a lot of the things we want to do, but we see everybody from people trying to do serious big board designs with thousands of pins to people in adjacent roles. Think about firmware engineers, mechanical engineers and test engineers who need a design to de-risk and work on their prototypes but maybe aren’t building the flight design or the main production design that’s going to go into a big product. And of course, all the way down to hobbyists. I saw some keyboards come through in the last few days, I’ve seen some basic radios and things of that nature, and lots of Raspberry Pi and Arduino applications, which are fun.

But I think where the product is most sticky today is with what I think of as supporting boards. If you think about a SpaceX flight computer, we can’t do that reliably yet; the person designing it, we can’t really help. But if you think about a person who is de-risking some of the components on it, doing environmental testing and building boards for that use case, or somebody who’s building a bed-of-nails for that board, or somebody who’s building a test rack for that board, those people we can help and save significant time. That’s where we see the best applications so far.

MB: Tell me about the environment. Right now it’s cloud-based. Do you anticipate that this will ever be available on the desktop?

SN: That’s a tough question. The desktop is unfortunately much more constrained than cloud for compute resources. As you might imagine, we drastically benefit from having access to thousands of CPUs and tens or hundreds of GPUs. So the product would have to be very sophisticated to fit all the computing you need into a single desktop. I wouldn’t rule it out, but what I think is more likely to happen, if we got to the point where we could be efficient enough for a single computer, is that we would then still be on the cloud but then using all that extra compute to make boards that are actually better than human. If we could take a high production run of a million boards and save a dollar per board in manufacturing, that would be very, very significant and worth spending the compute on. I suspect that is more likely where things will end up. To answer your question briefly, we don’t currently expect a single desktop application.

MB: You did mention earlier about working with different fabricators, and I want to point out one of the incentives that Quilter recently added is a PCB ordering service which its users can leverage for free prototypes of their AI-designed circuit boards. Tell me more about this Fab for Free program.

SN: Obviously, as an early-stage company working on something this difficult, we are very incentivized to learn. We want to get as many people as possible creating designs, manufacturing those designs, telling us how it went and giving us feedback on what to build better, and so we’re supporting that. For professional use cases where people already have their fabricators and contracts set up, this probably isn’t that helpful, but for people who are running a board as hobbyists or at home, we don’t want fab to be a barrier to trying it out, especially if you’re willing to talk with us about it, share it with other users of Quilter, or let us publish a blog about it. We’re happy to fund the build of the PCBA and to work with you on it.

MB: Which fabricators are you working with for those?

SN: So far, we are tailored to produce files for JLCPCB, OSH Park and PCBWay, but in general we also run compilations with rules that basically any fab should be able to handle for simpler designs, so I wouldn’t rule out any of the others either.

MB: And you’re looking at this essentially like a loss leader in order to help learn more about developing your process?

SN: Yeah. I really welcome folks trying it out and just giving us good feedback. If you believe this is how things should work in five or 10 years, come help us make it so.

Mike Buetow is president of PCEA (pcea.net).