Higher performance requirements reduce timing margins on interfaces, thus imposing strict rules on board routing.

Interface performance depends on the bit transfer rate (set by the frequency of a clock) and on the protocol used to transfer commands, addresses and data over the interface lines. Although the evolution of memory interfaces from SDR to DDR brought some additional commands and functional improvements, the primary performance gain has come from technologies that increase the bit transfer rate. This article focuses on how the bit transfer rate evolved over the generations of synchronous memories.

Theoretically, an interface's maximum speed is determined by the switching frequency of the IO buffers and by the setup and hold time requirements of the receiver input buffers. In practice, particularly at high frequencies, signal integrity issues must also be resolved. Board design (routing) rules are developed through board-level simulations so that both timing requirements and signal quality requirements are met, and those routing guidelines also have to be practical (easily implementable). These factors lower the achievable speed below the theoretical maximum. So how is high-frequency operation achieved on memory interfaces?

Memory interfaces. The history of dynamic memories dates to the early 1970s, when computer manufacturers began offering large memory subsystems. As PCs became an integral part of daily life, demand for memories with both higher speed and higher density has kept rising: every generation of computing systems targets more memory at higher speeds. Dynamic memory devices were at first asynchronous, which limited the achievable system performance. With the advent of synchronous memory devices, the race for speed started, since the memory interface is one of the most significant architectural components of a computer.

Two components make up a memory interface (like any other interface):

The IO (input/output) buffer is the transistor circuit that drives and/or receives bits (digital information) on an interface. The switching speed of its transistors, which depends on the silicon technology used, determines the maximum (at least theoretical) speed achievable on the interface. The IO buffer technology also determines the voltage levels of a signal on the interface and its timing with respect to the synchronizing signal: for example, how long after the clock or strobe a data (or address, command or control) signal becomes valid at the driving IO buffer, and the setup and hold times of data signals with respect to the clock or strobe required by the receiving IO buffer. The drive strength of the IO buffer is the primary factor in how many memory devices can be connected to the interface.

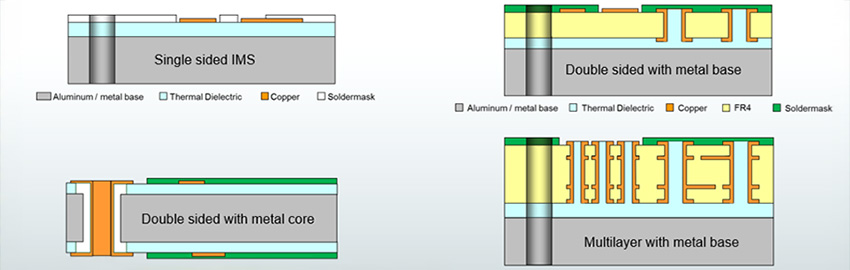

An interconnect is the trace (line) on a printed circuit board that connects the IO buffers of ICs, forming the hardware driving and receiving functions for a logical operation (write or read).

The swing amplitude of a signal is the voltage difference between the high and low levels that represent digital information, as required by the silicon technology. Because every transition must traverse the full swing, a larger swing takes longer at a given slew rate, so the swing amplitude affects the speed achievable over the interface.

In addition, the behavior of the signal on the interconnect (reflections, ringing, etc.) is another factor that limits the operating frequency of the interface.

First-generation synchronous memories became widely used in the early 1990s. They are now also known as SDR (single data rate) SDRAMs; a typical SDRAM datasheet is given in Reference 1. An SDR memory channel is composed of address, command, control and data lines, and it is a common-clock interface in terms of how the clock is connected to the components.

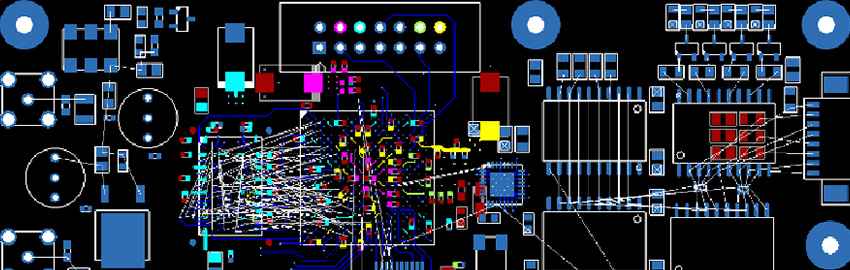

Figure 1 shows a typical implementation of an SDR memory interface. The data bus width determines the number of devices connected in one rank. The total memory size that can be targeted is limited by the number of chip-select control signals, which determines the number of ranks in the system.

Typical clock rates were 66, 100 and 133 MHz; 155 MHz devices existed, but they could be used only in small memory implementations. Memory modules were standardized as 168-pin DIMMs.

The memory devices may be on a DIMM, and the routing differs from one DIMM type to another. Alternatively, the memory in a system can be implemented device-down (memory devices are all on the main board, with no connectors and no memory modules). That is a challenge in itself in terms of placement and of routing into and out of the devices, and it is highly likely to end up with several different topologies for each signal and pin connection.

Routing signals to the devices was constrained by signal-quality issues, limiting the size of the memory that could be implemented for a system, and presented difficulties in device placement with respect to the memory controller.

To correct signal quality issues, series or parallel termination resistors often were used, but increased cost and, in most cases, made routing more challenging.

The number of ranks determines the number of devices per data signal, while the total number of devices fixes the number of loads for each address and command signal.
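The load counting in this paragraph can be sketched as a small function. It assumes an unbuffered channel (device-down or unbuffered DIMM) in which every device connects to every address and command line, each chip select drives the devices of one rank, and each data line connects to one device per rank; the function name and parameters are hypothetical.

```python
def channel_loads(bus_width_bits: int, device_width_bits: int, ranks: int) -> dict:
    """Count receiver loads on an unbuffered SDR-style memory channel.

    Assumptions: all devices in all ranks share each address/command line,
    one chip select per rank, and each data line reaches one device per rank.
    """
    if bus_width_bits % device_width_bits:
        raise ValueError("bus width must be a multiple of the device width")
    devices_per_rank = bus_width_bits // device_width_bits
    total_devices = devices_per_rank * ranks
    return {
        "devices_per_rank": devices_per_rank,
        "loads_per_data_line": ranks,               # one device in each rank
        "loads_per_chip_select": devices_per_rank,  # all devices of one rank
        "loads_per_address_line": total_devices,    # every device on the channel
    }


# 64-bit data bus built from x8 devices, two ranks
print(channel_loads(64, 8, 2))
# {'devices_per_rank': 8, 'loads_per_data_line': 2, 'loads_per_chip_select': 8, 'loads_per_address_line': 16}
```

Even in this small configuration, each address line carries eight times the load of a data line, which is the imbalance the following discussion of trace lengths runs into.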

To achieve higher speeds on the interface, two issues needed to be resolved:

- The speed on an SDR memory interface is limited by the clock cycle time (tCYC). A signal (data, address, command or control) becomes valid at the output of the driver (in the memory controller) some delay after the clock edge, then travels over the interconnect and must arrive at the receiving memory devices in time to meet the setup time requirement of their input buffers. That determines the maximum allowed flight time over the interconnect, which gets smaller as the clock frequency goes up; in addition, any clock routing skew, clock driver pin-to-pin skew and jitter reduce the allowed flight time even further, and hence require even shorter trace lengths.

- The amount of load on a data signal is considerably lower than the load on an address signal. A maximum and a minimum trace length can be determined (through simulations) for correct interface operation; however, at higher speeds, the difference between the maximum and minimum length (trace length mismatch) would be smaller. Coupled with already small trace lengths at higher speeds, it is difficult to route so many short traces in a small area (imposed by placement of the components) and still meet the trace length mismatch requirement (as determined by the minimum and maximum trace length difference).
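Both constraints above can be written as a simple common-clock timing budget. The sketch below uses a simplified model, which is an assumption rather than a method from this article: the latest arrival must still meet setup before the next clock edge after the slowest clock-to-output delay, skew and jitter are subtracted from the cycle; the earliest arrival must not violate hold; and a uniform propagation delay per inch (170 ps/inch here, an illustrative value) converts the flight-time window into a trace-length window. All timing numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CommonClockBudget:
    """Simplified common-clock timing budget; all times in nanoseconds."""
    t_cyc: float     # clock cycle time
    t_co_max: float  # driver clock-to-output delay, slowest case
    t_co_min: float  # driver clock-to-output delay, fastest case
    t_setup: float   # receiver setup requirement
    t_hold: float    # receiver hold requirement
    t_skew: float    # clock skew (routing plus driver pin-to-pin)
    t_jitter: float  # clock jitter

    def max_flight_ns(self) -> float:
        # Latest arrival must still be t_setup before the next clock edge.
        return self.t_cyc - self.t_co_max - self.t_setup - self.t_skew - self.t_jitter

    def min_flight_ns(self) -> float:
        # Earliest change must come at least t_hold after the capturing edge.
        return max(0.0, self.t_hold + self.t_skew - self.t_co_min)

    def length_window_in(self, ps_per_inch: float) -> tuple[float, float]:
        """Convert the flight-time window to a (min, max) trace length in inches."""
        lo, hi = self.min_flight_ns(), self.max_flight_ns()
        if hi < lo:
            raise ValueError("no valid flight-time window at this cycle time")
        ns_per_inch = ps_per_inch / 1000.0
        return lo / ns_per_inch, hi / ns_per_inch


for f_mhz in (100.0, 133.0):
    budget = CommonClockBudget(
        t_cyc=1000.0 / f_mhz, t_co_max=4.5, t_co_min=0.9,
        t_setup=1.5, t_hold=0.8, t_skew=0.3, t_jitter=0.2,
    )
    lo, hi = budget.length_window_in(ps_per_inch=170.0)
    print(f"{f_mhz:.0f} MHz: flight {budget.min_flight_ns():.2f} to "
          f"{budget.max_flight_ns():.2f} ns, length {lo:.1f} to {hi:.1f} in")
```

With identical buffer timing, raising the clock from 100 MHz to 133 MHz shrinks the maximum trace length by roughly a factor of three, while the minimum length stays put, so the allowed length mismatch collapses as well.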

These issues were resolved by moving to the DDR memory interface, which became common in the early 2000s. Figure 2 shows a typical implementation of a DDR memory channel.

The main features added to the memory interface (Reference 2) are summarized below:

1. By changing the interface operation to source synchronous, the interface speed is limited only by the skew between the clock and the other signals, which still requires tight control in routing but is easier to achieve. The flight-time constraint imposed by the common-clock cycle time was removed.

2. Clock signal was changed to differential signaling, providing a more stable timing signal; address, command and control signals were timed with the differential clock, which captured signals at the crossing point (of the positive and negative components of the signal).

3. For the IO buffers, 2.5V SSTL (stub series terminated logic) circuit technology (Reference 3) was used, effectively reducing the swing amplitude of the signals to 2.5 V. Input buffers switch when the input waveform crosses the reference voltage Vref (0.5 × VDDQ = 1.25 V). This removed the delay for the waveform to reach fixed threshold voltage levels, a delay that varied with the load.

4. One issue with source synchronous interfaces is that the distance from driver to receiver is not known, because there is no trace length (flight time) limiter such as tCYC in common-clock interfaces. This is not an issue for a write operation, when the controller drives the data bus to the memory devices; it is an issue during a read operation, when the memory devices drive the bus and the memory controller needs to know exactly when to sample the data bus. To resolve this, a strobe signal was created, and the same strobe signal is used for both write and read operations. The data signals are synchronized with the strobe signal (generally one per byte group), which has the same load as the data signals. This made it easy to match the routing of the data signals and the strobe signal within a byte group.

5. With a stable differential clock and a strobe signal dedicated to each byte group, data is transferred on both the rising and falling edges of the strobe signal, doubling the data rate. Clock rates ranged from 133 MHz to 200 MHz, corresponding to data rates of 266 MT/s to 400 MT/s.

6. VIH(ac) and VIH(dc) threshold levels (and similarly VIL(ac) and VIL(dc)) were introduced (Reference 2), so some ringing on the interconnect, with amplitude between the ac and dc threshold levels, can be tolerated without affecting the estimated/simulated flight times.
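Item 6 can be illustrated with a check on a sampled receiver waveform: a rising input counts as switched once it reaches VIH(ac), and any later ringing is tolerated as long as it never falls back below VIH(dc). The sketch assumes VREF = 1.25 V with offsets of +0.31 V (ac) and +0.15 V (dc) as illustrative values; confirm the actual levels in the applicable specification (Reference 2). The function name and the waveforms are hypothetical.

```python
def rising_edge_valid(samples, vref=1.25, ac_offset=0.31, dc_offset=0.15):
    """Check a rising transition against ac/dc input thresholds.

    Returns (valid, index): index is the first sample at or above
    VIH(ac) = vref + ac_offset (None if never reached); valid is True
    only if every sample from that point on stays at or above
    VIH(dc) = vref + dc_offset.
    """
    vih_ac = vref + ac_offset
    vih_dc = vref + dc_offset
    for i, v in enumerate(samples):
        if v >= vih_ac:
            return all(s >= vih_dc for s in samples[i:]), i
    return False, None


# Overshoot, then ringing that dips below VIH(ac) but stays above VIH(dc).
ringing_ok = [0.4, 0.9, 1.4, 1.7, 2.3, 1.5, 2.1, 1.6, 1.8]
# Ringing that falls back below VIH(dc) after switching.
ringing_bad = [0.4, 0.9, 1.7, 2.3, 1.3, 1.9, 2.0]

print(rising_edge_valid(ringing_ok))   # (True, 3)
print(rising_edge_valid(ringing_bad))  # (False, 2)
```

A single threshold would treat the 1.5 V dip in the first waveform as a glitch back toward the low state; the separate dc level is what allows that amount of ringing to pass.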

Issues with DDR. Matching the strobe trace length to the data line lengths within the same byte group provided some flexibility in routing across byte groups at high speeds, but the strobe signals also had to maintain a tight timing relationship with the differential clock (tDQSCK, tDQSS, tDSS and tDSH; Reference 2), which in turn limited that flexibility. Also, the strobe signals were single-ended, and at high speeds tighter length matching was required because any noise on the signals (crosstalk, for example) limits performance.

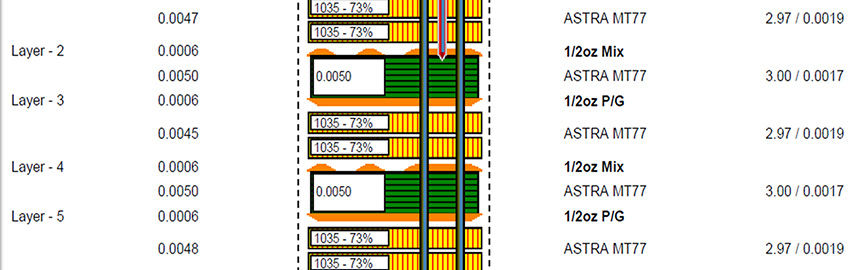

For even higher performance on memory interfaces, DDR2 (Reference 4) was introduced in 2003 with speeds of 400 MT/s and 533 MT/s, followed by 800 MT/s and, more recently, up to 1066 MT/s. Memory modules (DIMMs) were supplied with 240-pin connectors, and SODIMMs with tighter routing were designed for laptop and embedded applications. Figure 3 shows a typical DDR2 memory channel.
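The double-data-rate arithmetic behind these speed grades is simple: there are two transfers per clock cycle, so the clock frequency is half the MT/s figure, and the peak channel bandwidth is the transfer rate times the bus width in bytes. A minimal sketch, assuming a 64-bit data bus without ECC (the bus width is an assumption; the article does not specify one):

```python
def ddr_rates(mt_per_s: float, bus_width_bits: int = 64) -> tuple[float, float]:
    """Return (clock in MHz, peak bandwidth in MB/s) for a DDR-style interface.

    Data moves on both clock edges, so the clock runs at half the transfer
    rate. Peak bandwidth ignores refresh, bus turnaround and command overhead.
    """
    clock_mhz = mt_per_s / 2
    peak_mb_s = mt_per_s * bus_width_bits / 8
    return clock_mhz, peak_mb_s


for rate in (266, 400, 533, 800, 1066):
    clk, bw = ddr_rates(rate)
    print(f"{rate:>5} MT/s -> clock {clk:6.1f} MHz, peak {bw:7.0f} MB/s")
```

The speed grades are nominal; 533 MT/s, for instance, corresponds to a 266.67 MHz clock, which the nominal value rounds.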

DDR2 interface designs are geared toward easing challenges experienced on DDR memory interfaces.

1. Lowering the signaling voltage to 1.8 V enabled the silicon process to move to higher-speed IO buffer technologies (Reference 5). It also reduced power, which is highly favored in high-speed applications.

2. Moving to differential signaling for the strobe provided a more noise-immune timing signal for the data lines.

3. An OCD (off-chip driver) impedance adjustment feature (optional; Reference 4) was added so that the memory devices can drive the DQ and DQS signals with an impedance matching the characteristic impedance of the interconnect.

4. Similarly, DDR2 IO buffers can be programmed to operate at full strength or reduced strength, based on the load. This feature can be used to reduce reflections on a lightly loaded interconnect.

5. ODT (on-die termination) is another feature included in DDR2 memory devices, improving the signal quality of the data interconnect. Several termination values are available (the DDR2 specification defines effective values of 50Ω, 75Ω and 150Ω), selectable by the controller. ODT is possibly as good as on-board termination resistors, but it also standardizes and optimizes the interconnect termination value for signal integrity under BIOS control, based on the load (the amount of memory on the board): for example, the difference between populating one DIMM or two DIMMs on a two-connector memory interface. ODT also saves the routing and placement space needed for on-board termination resistors.

6. A timing relationship is specified between the differential clock and the address and command lines. The number of loads on the clock, address, command and control signals is the same and can be large, depending on the number of ranks in the interface. The clock signal can easily be duplicated on the controller, effectively reducing the load per clock; furthermore, the control signals have to be one per rank, so the load on the control signals equals the load on the clock signals. Duplicating the address and command lines, however, would be prohibitively costly, because the controller would need many additional package pins. Instead, 1T and 2T timing can be implemented; 2T timing gives heavily loaded interfaces additional time for the controller to meet the setup and hold times at the memory devices.

While 1T timing is the standard memory interface specification, 2T allows more time for address and command signals to travel over the interconnect and still meet the timing requirements on the target devices (Figure 4).

7. Routing was relaxed further by introducing circuits that delay the clock signal with respect to the address and command signals under program control. This proved to be a viable way for memory controller designs to make memory interface routing practical at higher speeds.

On interfaces where routing makes it difficult to meet the length mismatch requirement between the clock, address and command signals, a controller with a delay feature can delay the clock signal by the required amount with respect to address and command, and therefore meet the flight-time-matching requirements even if, for example, the clock trace was routed considerably shorter than the other signals.

Similarly, the controller can implement delays between the clock and each byte group's strobe, individually adjusting each strobe's flight time with respect to the clock, so that the timing relationship between the clock and each strobe can be met independently of the others, which gives flexibility in routing the data byte groups. Data signal flight times within each byte group must still be matched to the respective strobe trace lengths (within the design spec as determined by simulations) to meet the device data input specifications. The signal flight time matching mechanism is shown in Figure 5.
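The 1T/2T trade-off in item 6 can be sketched as a setup-margin calculation. The model is a simplification assumed for illustration: the controller launches address and command a clock-to-output delay after a clock edge, the device samples them on the edge one (1T) or two (2T) clock periods later, and only the extra flight time of the heavily loaded address/command nets relative to the lighter clock net counts against the budget. All timing values are hypothetical.

```python
def address_setup_margin_ns(t_ck, t_co, t_flight_delta, t_is, cycles=1):
    """Setup margin (ns) at the memory device for address/command signals.

    Simplified model (an assumption): signals launch t_co after a clock edge
    and are sampled `cycles` clock periods later (1 = 1T, 2 = 2T, where the
    bus is held for two clocks). t_flight_delta is the extra flight time of
    the loaded address/command nets compared with the clock net; t_is is the
    device input setup requirement.
    """
    if cycles not in (1, 2):
        raise ValueError("cycles must be 1 (1T) or 2 (2T)")
    return cycles * t_ck - t_co - t_flight_delta - t_is


T_CK = 2.5  # ns, i.e. a 400 MHz clock (800 MT/s)
for mode in (1, 2):
    margin = address_setup_margin_ns(T_CK, t_co=0.8, t_flight_delta=1.5, t_is=0.4, cycles=mode)
    print(f"{mode}T timing: setup margin {margin:+.2f} ns")
```

In this example, the heavily loaded address bus misses setup with 1T timing; 2T adds a full clock period of margin at the cost of one extra clock per command.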

Issues with DDR2 interfaces. DQ and DQS signals within a byte group must be routed to meet the device timing specifications. No feature helps simplify the routing of the byte groups, and tighter length matching is required at higher speeds. Also, the stepping resolution of the delay circuits determines how closely the delays can be adjusted to match those determined by board-level simulations: with finer resolution circuits, the delays can be set as close to the simulated values as possible.
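The effect of step resolution can be shown by rounding a target delay to the nearest available tap and reporting the residual error. The sketch assumes a clock trace routed 1.3 inches shorter than the address/command traces, a uniform 170 ps/inch propagation delay and a 64-step delay line; all of these are illustrative, hypothetical values.

```python
def delay_setting(target_ps, tap_ps, max_taps):
    """Choose the delay-line tap closest to target_ps.

    Returns (tap, residual_ps) with residual_ps = achieved - target.
    Raises ValueError if the target lies outside 0 .. max_taps * tap_ps.
    """
    if not 0 <= target_ps <= max_taps * tap_ps:
        raise ValueError("target delay outside the delay-line range")
    tap = round(target_ps / tap_ps)
    return tap, tap * tap_ps - target_ps


PS_PER_INCH = 170.0  # assumed uniform propagation delay
mismatch_in = 1.3    # hypothetical: clock routed 1.3 in shorter than address/command
target = mismatch_in * PS_PER_INCH

for tap_ps in (50.0, 25.0, 10.0):
    tap, err = delay_setting(target, tap_ps, max_taps=64)
    print(f"{tap_ps:4.0f} ps steps: tap {tap:2d}, residual {err:+.1f} ps")
```

With 50 ps steps the residual is about 21 ps, and with 10 ps steps it drops to about 1 ps; the worst case is always half a step, which is why finer delay circuits track the simulated values more closely.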

The challenges in higher speed DDR2 interfaces – comprehensive simulations determining the routing rules, which end up being difficult to implement, resulting in high design costs – have led to advances in DDR3 memory interfaces.

The DDR3 memory interface (Reference 6) is identical to DDR2 in the number and types of signals, as well as in several other features, e.g., ODT. The voltage was reduced from 1.8V in DDR2 to 1.5V, reducing power requirements and also the swing amplitude, which helps increase the maximum frequency that can be achieved. The IO drivers were designed with low output impedance: Ron (the IO buffer on-resistance) is programmable to either 34Ω or 40Ω, which improves signal quality on interconnects with a correspondingly low characteristic impedance. An interconnect with low characteristic impedance needs to be a wide trace, and frequency-dependent losses (due to skin effect) tend to be lower on wider traces. Additional features are also implemented in the memory devices and the controller.
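Two effects in this paragraph can be put into numbers with textbook formulas. For the driver impedance, the reflection coefficient seen by a wave returning to a resistive source is Γ = (Ron − Z0)/(Ron + Z0), so a driver matched to the line absorbs reflections instead of re-launching them. For the voltage reduction, the switching power of a capacitive load scales as C·V²·f, so at equal capacitance and frequency it falls with the square of the supply. The sketch assumes an ideal resistive driver and a lossless line; the 50Ω trace value and the function names are illustrative assumptions.

```python
def source_reflection(r_on_ohms: float, z0_ohms: float) -> float:
    """Reflection coefficient for a wave returning to a resistive driver.

    Models the driver as an ideal resistance r_on_ohms terminating a
    lossless line with characteristic impedance z0_ohms.
    """
    return (r_on_ohms - z0_ohms) / (r_on_ohms + z0_ohms)


def relative_switching_power(v_new: float, v_ref: float) -> float:
    """Ratio of C*V^2*f switching power at v_new versus v_ref (same C and f)."""
    return (v_new / v_ref) ** 2


for r_on in (34.0, 40.0):
    for z0 in (34.0, 40.0, 50.0):
        gamma = source_reflection(r_on, z0)
        print(f"Ron={r_on:.0f} ohm, Z0={z0:.0f} ohm: gamma={gamma:+.3f}")

print(f"1.8 V vs 2.5 V: {relative_switching_power(1.8, 2.5):.2f}x switching power")
print(f"1.5 V vs 1.8 V: {relative_switching_power(1.5, 1.8):.2f}x switching power")
```

A 34Ω driver on a 50Ω line reflects about 19% of a returning wave with inverted polarity, whereas a matched line reflects nothing; this is why low Ron pairs with low-impedance (wider) traces.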

Figure 6 shows a UDIMM implementation of a DDR3 interface. Note the “fly-by topology” routing on the DIMM: the clock, address, command and control signals all enter the DIMM at the middle of the module and are routed first to the left-most device (Device0 in the figure). The routing then continues sequentially to each device, eventually arriving at the right-most device (Device7) and ending in a termination on the DIMM. This provides several signal integrity advantages to the interface implementation on the board:

- Fly-by topology routing prevents issues related to the “tree-topology” used in earlier memory modules – specifically, reflections and larger routing area on the board; all the signals, except DQ and DQS, can be routed to the DIMM in a central area, provided the memory controller ball-map was designed to easily allow for that.

- Fly-by topology routing makes it easier to achieve very short trace connections to the memory device pins, which minimizes the effect of stubs on signal quality.

Fly-by topology is also used on SODIMMs, and can be used in memory-down implementations, making routing easier.

The fly-by topology, however, introduces one complication in the design: the timing of the data (DQ) and associated strobe (DQS) signals. Because the clock, address, command and control signals arrive at the memory devices sequentially, the data transfers have to follow the same sequential order in time. For example, for a write operation, the left-most device must receive Strobe0 and its data signals (Byte0) in the timing relationship defined in the device datasheet, and simulations can determine the trace lengths with respect to the clock routing. Strobe1 and its data signals (Byte1) must meet the same timing relationship, but with respect to the time the clock arrives at Device1; therefore, the memory controller has to know the delay from Device0 to Device1. The controller needs these delays all the way to the right-most device, and each device-to-device delay can differ from the others. The same issue applies to read operations. The memory controller resolves it with the so-called write and read leveling feature (commands) implemented in DDR3 memory devices.
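The sequential arrival can be estimated from the fly-by segment lengths. The sketch below assumes a uniform propagation delay (an illustrative 170 ps/inch) and hypothetical segment lengths; a real design would take loaded propagation delays from board-level simulation, because each device input adds capacitance that slows the line.

```python
from itertools import accumulate


def flyby_arrivals_ps(lead_in_in, segments_in, ps_per_inch=170.0):
    """Clock arrival time (ps) at each device along a fly-by route.

    lead_in_in: trace length from the controller to Device0, in inches.
    segments_in: lengths between consecutive devices (Device0->1, 1->2, ...).
    Returns one arrival time per device, in routing order.
    """
    cumulative = accumulate(segments_in, initial=lead_in_in)
    return [length * ps_per_inch for length in cumulative]


# Hypothetical DIMM: 3.0 in to Device0, then 0.6 in between devices,
# with a longer 0.9 in hop across the middle of the module.
arrivals = flyby_arrivals_ps(3.0, [0.6, 0.6, 0.6, 0.9, 0.6, 0.6, 0.6])
for dev, t in enumerate(arrivals):
    print(f"Device{dev}: clock arrives at {t:6.1f} ps, {t - arrivals[0]:5.1f} ps after Device0")
```

Here Device7 sees the clock several hundred picoseconds after Device0, far more than a strobe-to-clock window allows, which is why each byte lane needs its own strobe timing.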

The primary goal of write/read leveling is to adjust the skew (timing difference) between clock and strobe signal, such that the required relationship between the two signals is met (at the memory device for write and memory controller for read).

Though not specifically related to this discussion, write leveling is performed by the controller during interface initialization. The controller drives the strobe signals and sweeps their delay while each memory device samples its clock on the rising edge of the strobe and returns the sampled level on the data lines; when the returned level changes from 0 to 1, the strobe edge has reached the clock edge at that device, and the controller knows the delay for that particular device in the routing sequence. The controller uses this information to delay each strobe so that it is generated at the right time. Similarly, read leveling is performed by first setting the appropriate command register bits in the devices, after which the devices send a predefined bit pattern (01010101….) on the data lines along with the respective strobe signals. Once the controller captures the data from each byte group correctly, it determines the delay it needs for each strobe individually to perform read operations correctly.
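The write-leveling search can be illustrated with a behavioral model. In this sketch, each device samples its local 50%-duty clock on the rising edge of its strobe and reports the sampled level, and the controller sweeps the strobe delay upward from zero until the reported level changes from 0 to 1. The clock period, tap size, tap count and per-lane arrival and flight times are all hypothetical, and the code is an illustration of the principle, not any controller's actual algorithm.

```python
def clock_level(t_ps, clock_arrival_ps, t_ck_ps):
    """Level (0/1) of a 50%-duty clock whose rising edges reach the
    device at clock_arrival_ps + k * t_ck_ps."""
    phase = (t_ps - clock_arrival_ps) % t_ck_ps
    return 1 if phase < t_ck_ps / 2 else 0


def write_level(clock_arrival_ps, dqs_flight_ps, t_ck_ps, tap_ps, max_taps):
    """Return the first DQS delay tap where the device's feedback goes 0 -> 1.

    If the strobe starts in the clock's high phase, the search finds the
    next rising edge instead. Returns None if no transition is found.
    """
    previous = None
    for tap in range(max_taps + 1):
        dqs_edge = tap * tap_ps + dqs_flight_ps  # strobe edge time at the device
        sampled = clock_level(dqs_edge, clock_arrival_ps, t_ck_ps)
        if previous == 0 and sampled == 1:
            return tap
        previous = sampled
    return None


T_CK_PS = 2500.0  # 400 MHz clock, assumed for illustration
TAP_PS = 25.0     # hypothetical delay step
MAX_TAPS = 200    # hypothetical number of steps

clock_arrivals = [510.0, 612.0, 714.0, 816.0, 969.0, 1071.0, 1173.0, 1275.0]  # hypothetical
dqs_flights = [420.0, 380.0, 450.0, 400.0, 430.0, 390.0, 410.0, 440.0]        # hypothetical

for lane, (clk, dqs) in enumerate(zip(clock_arrivals, dqs_flights)):
    tap = write_level(clk, dqs, T_CK_PS, TAP_PS, MAX_TAPS)
    print(f"Strobe{lane}: tap {tap}, delay {tap * TAP_PS:.0f} ps")
```

Each lane converges to the first tap at which its strobe edge lands at or just after the clock edge at its own device, so the result already includes both the fly-by delay and that lane's strobe trace delay.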

Note that the delays determined by the leveling process include both the device-to-device delay and the delay due to the strobe trace length on the board. This ensures that the strobe (along with the data) arrives at a specific device within a specified window of the clock. Therefore, the lengths of the clock, address, command and control signals to the first device are determined through simulations and routed, including a mismatch that can be specified at that time between the clock trace length and the trace lengths of the other signals. The trace length of the strobe signal to the first device is also determined through simulations and routed within a mismatch window with respect to the clock, as specified in the datasheets, and the trace lengths of the other strobes follow the same mismatch rules. However, once write leveling is complete, larger length mismatches between any strobe signal and the clock can be tolerated, depending on the maximum delay the delay registers can hold. This means the trace lengths of the byte groups can differ widely from each other, which provides high flexibility in board routing.
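The limit set by the delay registers can be checked directly: each strobe needs an extra delay equal to its device's clock arrival time minus its own flight time, and that delay has to fit within the register's range. The sketch below assumes a delay line of `max_taps` equal steps and a uniform 170 ps/inch propagation delay for converting the range into an equivalent length mismatch; the tap parameters and per-lane times are hypothetical.

```python
def max_mismatch_inches(tap_ps, max_taps, ps_per_inch=170.0):
    """Largest strobe-versus-clock flight-time difference, expressed as trace
    length, that the delay registers can absorb (uniform delay per inch assumed)."""
    return max_taps * tap_ps / ps_per_inch


def lanes_out_of_range(clock_arrival_ps, dqs_flight_ps, tap_ps, max_taps):
    """Return the byte lanes whose required strobe delay cannot be programmed."""
    limit = max_taps * tap_ps
    bad = []
    for lane, (clk, dqs) in enumerate(zip(clock_arrival_ps, dqs_flight_ps)):
        needed = clk - dqs  # extra delay so DQS reaches the device with the clock
        # A negative value would require delaying the clock instead; not modeled here.
        if not 0 <= needed <= limit:
            bad.append(lane)
    return bad


clock_arrivals = [510.0, 612.0, 714.0, 816.0, 969.0, 1071.0, 1173.0, 1275.0]  # hypothetical
dqs_flights = [420.0, 380.0, 450.0, 400.0, 430.0, 390.0, 410.0, 440.0]        # hypothetical

for taps in (32, 64):
    print(f"{taps} taps of 25 ps: range {max_mismatch_inches(25.0, taps):.1f} in, "
          f"lanes out of range: {lanes_out_of_range(clock_arrivals, dqs_flights, 25.0, taps)}")
```

With 32 taps the last lane (needing about 835 ps) exceeds the 800 ps range; doubling the tap count absorbs it, illustrating how the register range rather than the datasheet window becomes the routing limit after leveling.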

Figure 7 shows an example where the length of the Strobe0 is determined by simulations to have a delay of “dly0” from the clock. After a write-leveling operation, the controller determines the delays for other strobes, for example a delay of dly1 for Strobe 1 that may have a different trace length, and a delay of dly7 for Strobe7 that may be of a different length than all others. Note that with the delays, the strobe-clock window as specified in the datasheet is achieved.

The lengths of the data signals within a byte group, relative to the respective strobe signal, should be determined through simulations and routed to meet the timing specified in the datasheets.

Read leveling works similarly: upon a signal from the controller, the memory devices start sending the 0101… pattern on all data signals, along with the corresponding strobe signals. The controller determines the delays required to receive each strobe and its byte group by finding the settings at which it reads the data pattern correctly during leveling.
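One common way to turn a read-leveling sweep into a delay setting is to record, for each tap, whether the expected pattern was captured, and then pick the middle of the longest run of passing taps, which leaves the most margin on both sides. The article does not say how a particular controller picks the final value, so centering the window is an assumption here; the capture function and its pass range are hypothetical.

```python
EXPECTED = [0, 1, 0, 1, 0, 1, 0, 1]  # alternating read-leveling pattern


def sweep(capture_at_tap, max_taps):
    """Record pass/fail at every tap using a (hypothetical) capture function."""
    return [capture_at_tap(tap) == EXPECTED for tap in range(max_taps + 1)]


def center_of_passing_window(results):
    """Return the tap at the center of the longest run of passes, or None."""
    best_start, best_len = None, 0
    run_start = None
    for tap, ok in enumerate(results + [False]):  # sentinel closes a trailing run
        if ok and run_start is None:
            run_start = tap
        elif not ok and run_start is not None:
            if tap - run_start > best_len:
                best_start, best_len = run_start, tap - run_start
            run_start = None
    if best_start is None:
        return None
    return best_start + (best_len - 1) // 2


def fake_capture(tap):
    # Hypothetical byte lane: the pattern is captured correctly only for taps 5..13.
    return EXPECTED if 5 <= tap <= 13 else [1, 0, 1, 0, 1, 0, 1, 0]


results = sweep(fake_capture, 17)
print(center_of_passing_window(results))  # 9
```

Choosing the center rather than the first passing tap keeps the sampling point away from both edges of the data eye, so small drifts in voltage or temperature are less likely to push a lane into failure.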

Note that the finer the resolution of the steps in the delay function, the more precisely the alignment can be made, while the total range of the delay function determines how large a mismatch can be adjusted to meet the timing windows. Additionally, smart controllers can help achieve finer adjustments under BIOS control.

Issues with DDR3 interfaces. Routing DQ and DQS signals within a byte group should be done to meet the device timing specification that is more critical at higher speeds. This is the same issue as for DDR2. Also, the maximum difference between the lengths of the strobe signals depends on the timing window within which the delays can be adjusted with respect to the clock. The delay circuits need to have finer time-stepping capability, which increases the circuit complexity.

Other types of memory designs are also available. Registered DIMM (RDIMM) is one implementation where the address, command, control signals and clock are buffered by a register and a PLL on the memory module, effectively reducing the load to 1 per rank of memory devices for each signal. That certainly achieves good signal quality in many applications due to smaller and balanced loading on the signals. The data and strobe signal connections would be the same as for other design implementations.

However, the register(s) and clock PLL present an additional power requirement and cost in system implementations.

Another implementation, similar to RDIMM, is the LRDIMM (Load-Reduced DIMM), in which all interface signals are buffered, effectively presenting a single load per memory module on the channel; this suits systems with high memory capacity.

Summary

The performance requirements of systems, and specifically the role of the memory interface in system performance, cannot be ignored. Higher performance requirements, in turn, reduce timing margins on interfaces and impose strict rules on board routing. Additional creative features, aided by software, will be needed and will continue to be included in the design of future memory devices and memory channel controllers, both to make the design and routing of the interconnects feasible and to meet signal integrity requirements at high speeds.

References

1. Micron, Synchronous DRAM datasheet, http://download.micron.com/pdf/datasheets/dram/sdram/64MSDRAM.pdf.

2. JEDEC, JESD79F, Double Data Rate (DDR) SDRAM Specification.

3. JEDEC, JESD8-9B, Stub Series Terminated Logic for 2.5 V (SSTL_2).

4. JEDEC, JESD79-2E, DDR2 SDRAM Specification.

5. JEDEC, JESD8-15A, Stub Series Terminated Logic for 1.8 V (SSTL_18).

6. JEDEC, JESD79-3D, DDR3 SDRAM Specification.

Hal Katircioglu is manager, Platform Engineering Signal Integrity Group, ECG, at Intel Corp. (intel.com).